AI - AI

AIML - AI

Table of contents:

- AIML - AI

ref:

- OWAPS Top10 for LLM v1

- https://www.freecodecamp.org/news/large-language-models-and-cybersecurity/

- https://www.experts-exchange.com/articles/38220/Ensuring-the-Security-of-Large-Language-Models-Strategies-and-Best-Practices.html

- https://docs.whylabs.ai/docs/integrations-llm-whylogs-container

- https://hackernoon.com/security-threats-to-high-impact-open-source-large-language-models

- https://a16z.com/emerging-architectures-for-llm-applications/

- Examining Zero-Shot Vulnerability Repair with Large Language Models

- medusa

- awesome-generative-ai

- Google’s Secure AI Framework

- The Foundation Model Transparency Index

Link:

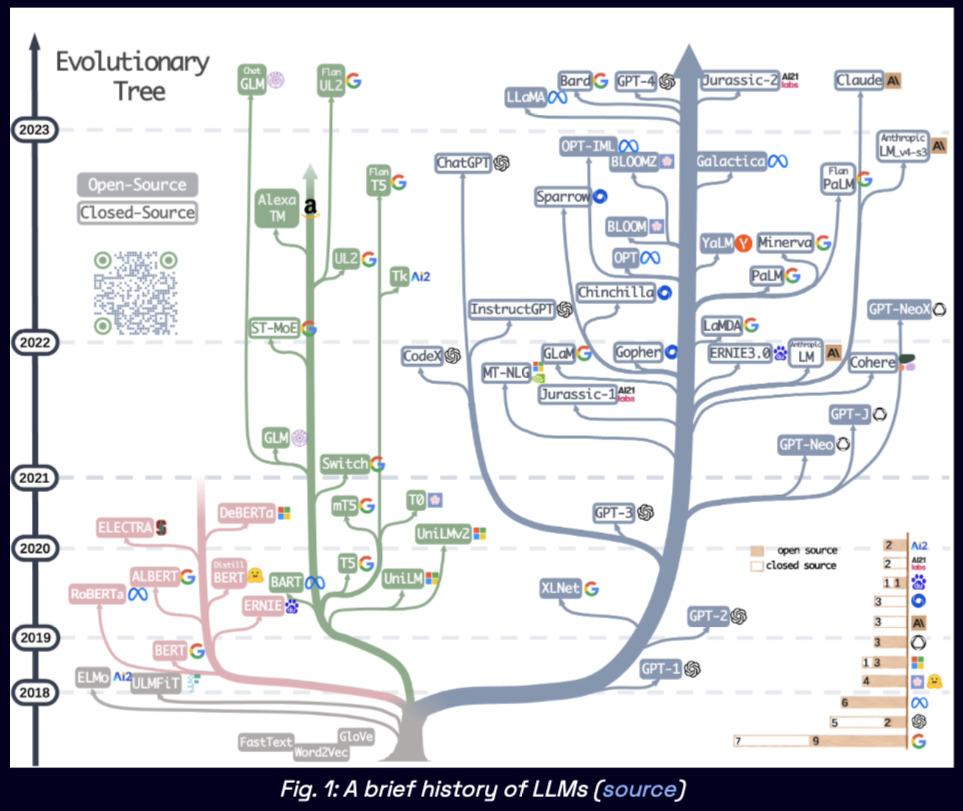

Overview

Research in artificial intelligence is increasing at an exponential rate. It’s difficult for AI experts to keep up with everything new being published, and even harder for beginners to know where to start.

- Transformers Neural Network

- After the big success of

Transformers Neural Network, it has been adapted to manyNatural Language Processing (NLP)tasks (such as question answering, text translation, automatic summarization)

“AI Canon”

- a curated list of resources we’ve relied on to get smarter about modern AI

- because these papers, blog posts, courses, and guides have had an outsized impact on the field over the past several years.

Data pipelines

- Databricks

- Airflow

- Unstructured

Embedding model

- OpenAI

- Cohere

- Hugging Face

Vector database

- Pinecone

- Weaviate

- ChromaDB

- pgvector

Playground

- OpenAI

- nat.dev

- Humanloop

Orchestration

- Langchain

- LlamaIndex

- ChatGPT

APIs/plugins

- Serp

- Wolfram

- Zapier

LLM cache

- Redis

- SQLite

- GPTCache

Logging / LLMops

- Weights & Biases

- MLflow

- PromptLayer

- Helicone

Validation

- Guardrails

- Rebuff

- Microsoft Guidance

- LMQL

App hosting

- Vercel

- Steamship

- Streamlit

- Modal

LLM APIs (proprietary)

- OpenAI

- Anthropic

LLM APIs (open)

- Hugging Face

- Replicate

Cloud providers

- AWS

- GCP

- Azure

- CoreWeave

Opinionated clouds

- Databricks

- Anyscale

- Mosaic

- Modal

- RunPod

OpenSource

- hugging face

- OpenAI

- Generative AI (answers for everything)

Programming

- python

- panda

AI modeling

- pyTorch

- Tensor flow (Google)

ML platforms

- Jupyter Notebooks

Time series

- Forecasting and predictive Analytics

Use case

- Supply Chain Management with GenAI

OpenSource -> fine tuning -> custom result

AI

- Artificial Intelligence refers to

the ability of computers to perform tasks that typically require human-level intellect. AI is useful in many contexts, from automation to problem solving and merely trying to understand how humans think.

But it is important to note that AI is only concerned with human intelligence for now – it could possibly go beyond that.

Many people correlate the word ‘Intelligence’ with only ‘Human Intelligence’. Just because a chicken may not be able to solve a mathematical equation doesn’t mean it won’t run when you chase it. It is ‘Intelligent’ enough to know it doesn’t want you to catch it 🐔🍗.

Intelligence spans a much wider spectrum, and practically expands to any living thing that can make decisions or carry out actions autonomously, even plants.

Divisions of AI

Artificial Intelligence is

centered around computers and their ability to mimic human actions and thought processes.Programming and experiments have allowed humans to produce ANI systems. These can do things like classifying items, sorting large amounts of data, looking for trends in charts and graphs, code debugging, and knowledge representation and expression. But computers don’t think like humans, they merely mimic humans.

This is evident in voice assistants such as

Google’s Assistant, Apple’s Siri, Amazon’s Alexa, and Microsoft’s Cortana. They are basic ANI programs that add ‘the human touch’. In fact, people are known to be polite to these systems simply because they combine computerized abilities with a human feel.These assistants have gotten better over the years but fail to reach high levels of sophistication when compared to their AGI counterparts.

There are two major divisions of AI:

Artificial Narrow Intelligence (ANI)

- focused on a small array of similar tasks or a small task that is programmed only for one thing.

- ANI is not great in dynamic and complex environments and is used in only areas specific to it.

- Examples include self-driving cars, as well as facial and speech recognition systems.

Artificial General Intelligence (AGI)

- focused on a wide array of tasks and human activities.

- AGI is currently theoretical and is proposed to adapt and carry out most tasks in many dynamic and complex environments.

- Examples include J.A.R.V.I.S from Marvel’s Iron Man and Ava from Ex-Machina.

Traditional AIML vs GenAI

Traditional AIML

- good at identify pattern

- learning from the pattern

- limit success with close supervised learning of very large amount of data

- must have human involved

GenAI

- produces ‘content’ (text, image, music, art, forecasts, etc…)

use ‘transformers’ (Encoders/Decoders) based on pre-trained data using small amount of fine tuning data

- encode and decode at the same time

- less data and faster

- GenAI use small data and uses encoders and decoders and Transformers to take that smaller data and be able to use it for other types of models. (Pre training)

- then add on top of it small amounts of fine tuning data

- and then get a a training model.

- As perceptions, not neurons.

- encode and decode at the same time

- Generative AI is a subset of traditional machine learning.

- And the generative AI machine learning models have learned these abilities by

finding statistical patterns in massive datasets of contentthat was originally generated by humans.

- And the generative AI machine learning models have learned these abilities by

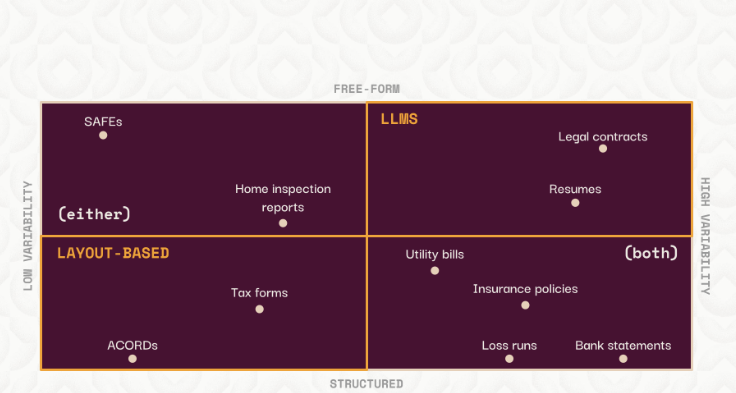

choosing between LLMs or layout-based Traditional AIML1

- recommends using LLM prompts for free-form, highly variable documents

- layout-based or “rule-based” queries for structured, less-variable documents.

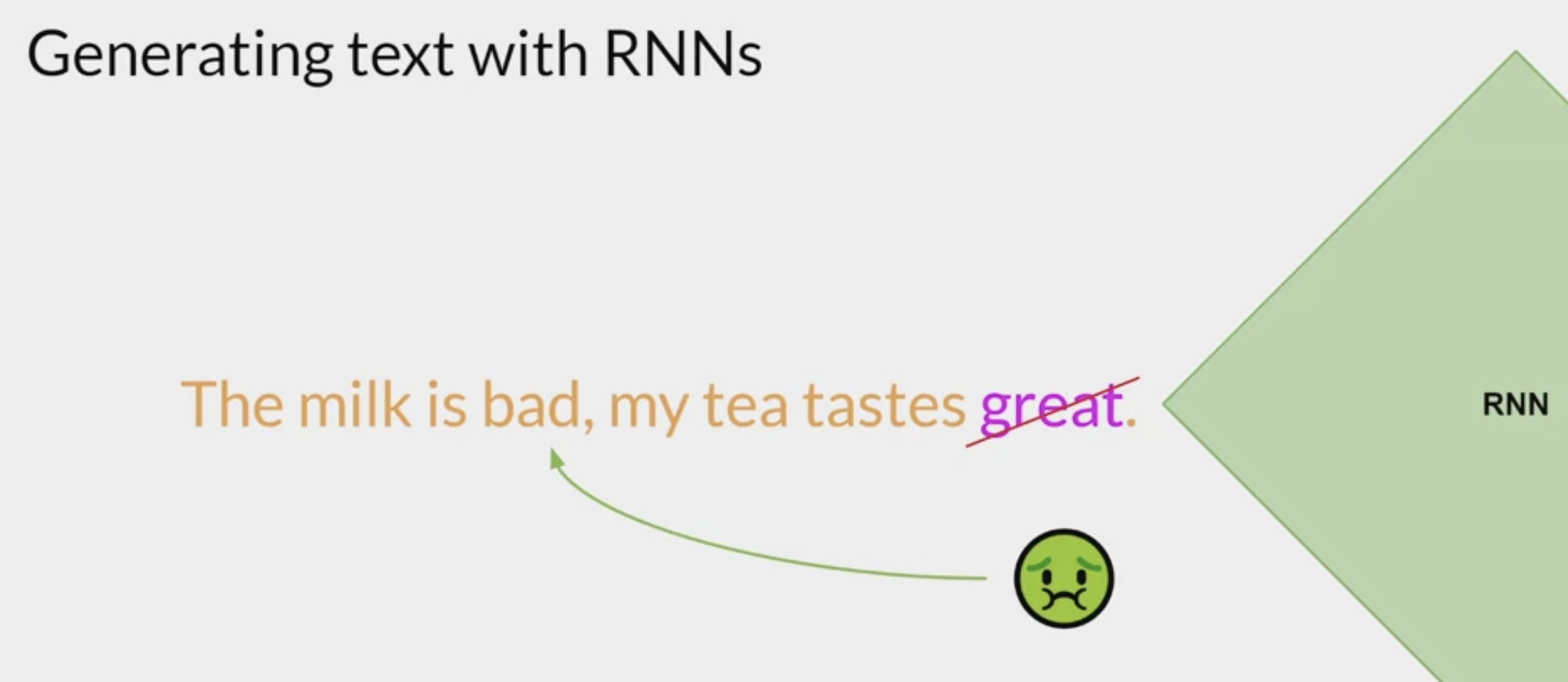

RNN - Recurrent neural networks

generative algorithms are not new.

recurrent neural networks - RNNs

- Previous generations of language models made use of an architecture called RNNs.

RNNs were limited by the amount of

compute and memoryneeded to perform well at generative tasks.- With just one previous words seen by the model, the prediction can’t be very good.

- scale the RNN implementation to be able to see more of the preceding words in the text,

- significantly scale the resources that the model uses.

- As for the prediction, Even though scale the model, it still hasn’t seen enough of the input to make a good prediction.

- To successfully predict the next word,

- models need to see more than just the previous few words.

- Models needs to have an understanding of the whole sentence or even the whole document.

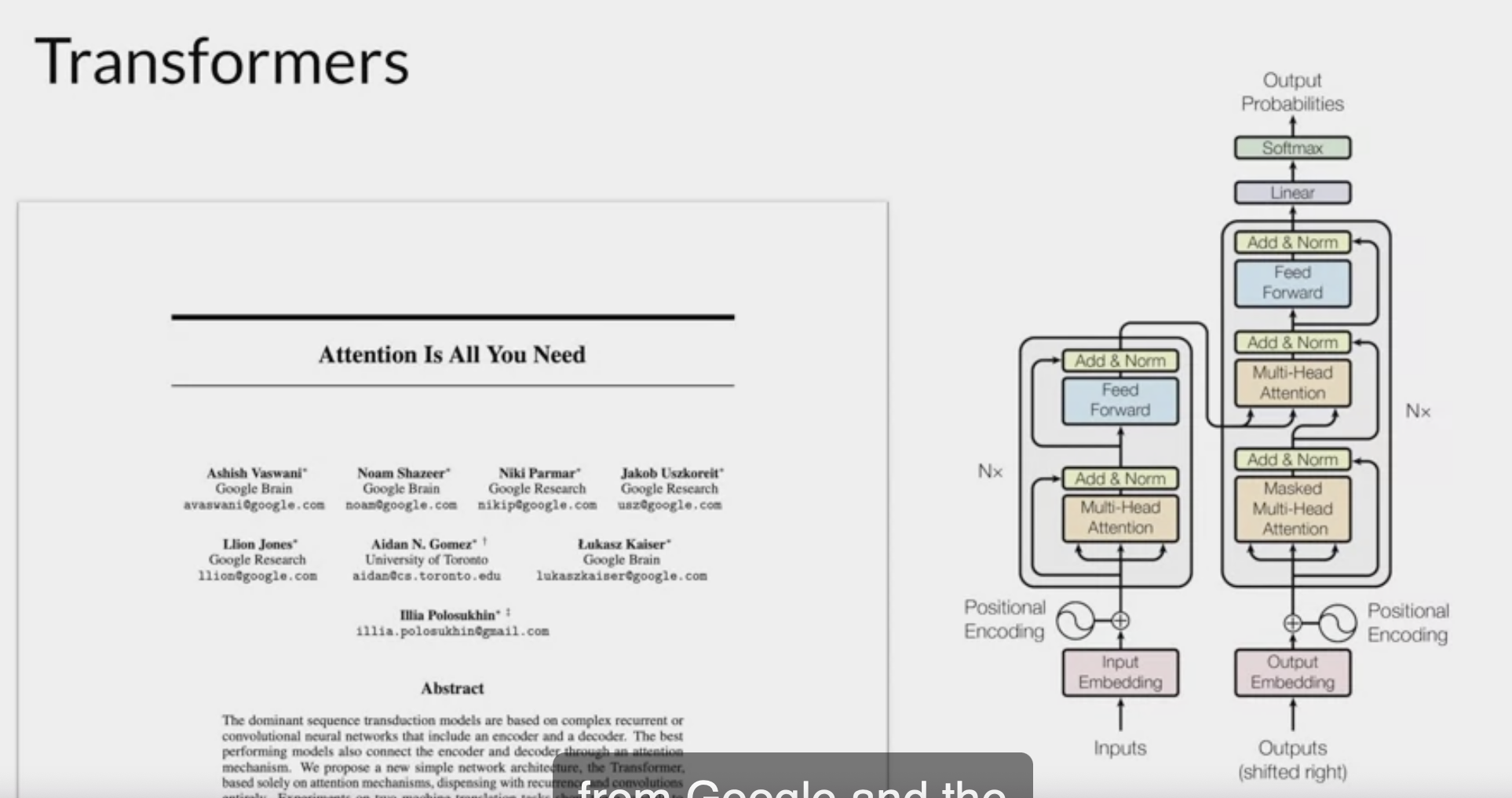

How can an algorithm make sense of human language if sometimes we can’t? in 2017, after the publication of this paper,

Attention is All You Need, from Google and the University of Toronto, everything changed. The transformer architecture had arrived.

- It can be scaled efficiently to use multi-core GPUs,

- parallel process input data, making use of much larger training datasets, and crucially,

- it’s able to learn to pay attention to the meaning of the words it’s processing.

Attention is all you need

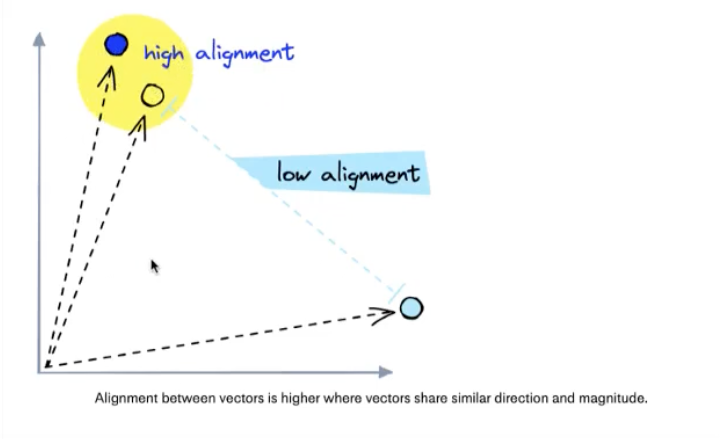

High alignment

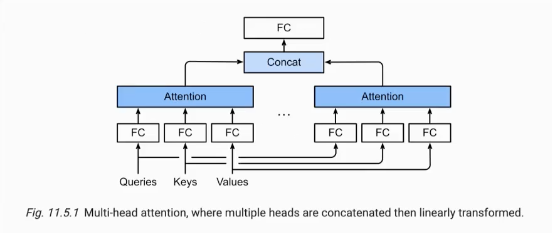

Multi-Headed Attention

名词解释

- LLM: Large Language Model,大型语言模型。

- PLM: Pretrain Language Model,预训练语言模型。

- RL: Reinforcement Learning,强化学习。

- SFT: Supervised Fine-Tuning,有监督微调。

ICL: In-Context Learning,上下文学习。

对比学习:

- 自监督学习(Self-supervised learning)可以避免对数据集进行大量的标签标注。

- 把自己定义的伪标签当作训练的信号,然后把学习到的表示(representation)用作下游任务里。

- 最近,对比学习被当作自监督学习中一个非常重要的一部分,被广泛运用在计算机视觉, 自然语言处理等领域。

- 它的目标是: 将一个样本的不同的, 增强过的新样本们在嵌入空间中尽可能地近,然后让不同的样本之间尽可能地远。

- SimCSE《SimCSE: Simple Contrastive Learning of Sentence Embeddings》是基于对比学习的表示学习方法,即采用对比学习的方法,获取更好的文本表征。

- SimCSE 细节可参考论文精读-SimCSE。

- Fine-Tuning : 微调。

- Prompt-Tuning: 提示微调。

Instruction-Tuning: 指示/指令微调。

- NLU: Natural Language Understanding,自然语言理解。

- NLG: Natural Language Generation,自然语言生成。

- CoT: Chain-of-Thought,思维链。

OOV:

- OOV 问题是 NLP 中常见的一个问题,其全称是 Out Of Vocabulary 超出词表外的词。

- 定义:

- 在自然语言处理过程中,通常会有一个字词库(vocabulary)。

- 这个 vocabulary 或者是提前加载的,或者是自己定义的,或者是从当前数据集提取的。

- 假设通过上述方法已经获取到一个 vocabulary,但在处理其他数据集时,发现这个数据集中有一些词并不在现有的 vocabulary 中,这时称这些词是 Out-Of-Vocabulary,即 OOV;

- 解决方法:

- Bert 中解决 OOV 问题。如果一个单词不在词表中,则按照 subword 的方式逐个拆分 token,如果连逐个 token 都找不到,则直接分配为[unknown]。

- shifted right: 指的是 Transformer Decoder 结构中,decoder 在之前时刻的一些输出,作为此时的输入,一个一个往右移。

- 重参数化: 常规思想: 对于网络层需要的参数是 $\Phi$,训练出来的参数就是 $\Phi$。重参数化方法: 训练时用的是另一套不同于 $\Phi$的参数,训练完后等价转换为 $\Phi$用于推理。

- PPL: 困惑度(Perplexity),用于评价语言模型的好坏。

- FCNN: Fully connected neural network,全连接神经网络。

- FNN: Feedforward neural network,前馈神经网络。

- DNN: Deep neural network,深度神经网络。

- MLP: Multi-layer perceptron neural networks,多层感知机。

- RM: Reward Model,奖励模型。

- PPO,Proximal Policy Optimization,近端策略优化,简单来说,就是对目标函数通过随机梯度下降进行优化。

- Emergent Ability: 很多能力小模型没有,只有当模型大到一定的量级之后才会出现。这样的能力称为涌现能力。

- AutoRegression Language Model: 自回归语言模型。

- Autoencoder Language Model: 自编码语言模型。

- CLM: Causal language modeling,因果语言建模,等价于 AutoRegression Language Model。

- AIGC: Artificial Intelligence Generated Content,生成式人工智能。

AGI: Artificial General Intelligence,通用人工智能。

- Bert;自编码模型,适用于 NLU(预训练任务主要挖掘句子之间的上下文关系) [^—步步走进Bert]

- GPT;自回归模型,适用于 NLG(预训练任务主要用于生成下文),有关 GPT 系列的理论知识,可参考本文的 GPT 系列章节;

GenAI

With the rise in popularity of Foundation Models, new models and tools are released almost every week and yet

- 就目前的发展趋势而言,大模型的训练基本就是按照Pretrain, Instruction-Tuning, RLHF三步走模式进行,因此技术上基本没什么问题,主要瓶颈存在于算力

对于中文大模型来说,获取高质量中文数据也是个问题。国内能耗费大规模成本进行大模型训练的厂商屈指可数

- 未来的发展趋势,可能有以下四个方向:

- 统一大模型: 头部企业逐步迭代出可用性很强的大语言模型(千亿级别),开放 API 或在公有云上供大家使用;

- 垂直领域模型: 部分企业逐步迭代出在相关垂直领域可用性很强的大语言模型(百亿级别),自用或私有化提供客户使用;

- 并行训练技术: 全新或更具可用性, 生态更完整的并行训练技术/框架开源,满足大部分有训练需求的企业/个人使用,逐步实现人人都能训练大模型;

- 颠覆: 或许某一天,横空出世的论文推翻了目前的大模型发展路线,转而证明了人们更需要的是另一种大模型技术,事情就会变得有意思了。

Large Language Model

“… a language model is a Turing-complete weird machine running programs written in natural language; when you do retrieval, you are not ‘plugging updated facts into the AI’, you are actually downloading random new unsigned blobs of code from the Internet (many written by adversaries) and casually executing them on the LM with full privileges. This does not end well.” - Gwern Branwen on LessWrong

a deep learning model which consists of a

neural networkwith billions of parameters, trained on distinctively large amounts of unlabelled data using self-supervised learning.At the core of all AI are algorithms. Algorithms are procedures or steps to carry out a specific task. The more complex the algorithm, the more tasks can be carried out and the more widely it can be applied. The aim of AI developers is to find the most complex algorithms that can solve and perform a wide array of tasks.

The procedure to create a basic fruit recognition model using an simple analogy:

- There are two people: A teacher and a bot creator

- The bot creator creates random bots, and the teacher teaches and tests them on identifying some fruits

- The bot with the highest test score is then sent back to the creator as a base to make new upgraded bots

- These new upgraded bots are sent back to the teacher for teaching and testing, and the one with the highest test score is sent back to the bot creator to make new better bots.

- This is an oversimplification of the process, but nevertheless it relays the concept. The Model/Algorithm/Bot is continuously trained, tested, and modified until it is found to be satisfactory. More data and higher complexity means more training time required and more possible modifications.

- the developer of the model can tweak a few things about the model but may not know how those tweaks might affect the results.

A common example of this are neural networks, which have hidden layers whose deepest layers and workings even the creator may not fully understand.

Self-supervised learning means that rather than the teacher and the bot creator being two separate people, it is one highly skilled person that can both create bots and teach them.

- This makes the process much faster and practically autonomous.

- The result is a bot or set of bots that are both sophisticated and complex enough to recognise fruit in dynamic and different environments.

In the case of LLMs, the data here are human text, and possibly in various languages. The reason why the data are large is because the LLMs take in huge amounts of text data with the aim of finding connections and patterns between words to derive context, meaning, probable replies, and actions to these text.

The results are models that seem to understand language and carry out tasks based on prompts they’re given.

Tuning 技术依赖于 LLM 的发展,同时也在推动着 LLM 的发展。

- 通常,LLM 指的是包含数百亿(或更多)参数的语言模型,这些模型在大量的文本数据上训练。

Features of LLMs

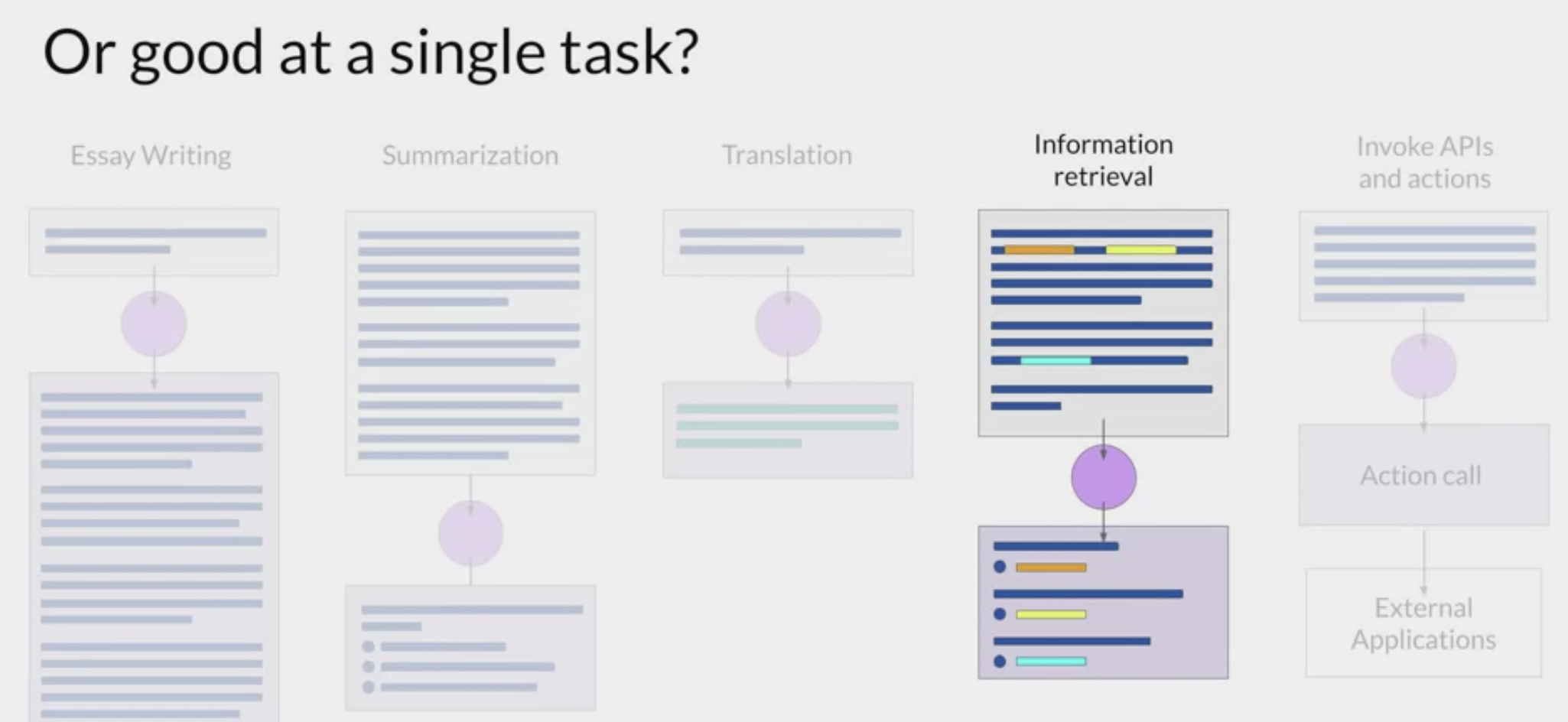

Information Retrieval

Translation

Text summarization

Invoke actions from text

- to invoke Apis, or some actions from elsewhere,

- connecting to resources that are based on the Internet.

Translation

- LLMs that are trained on an array of languages rather than just one can be used for translation from one language to another.

- It’s even theorised that large enough LLMs can find patterns and connections in other languages to derive meaning from unknown and lost languages, despite not knowing what each individual word may mean.

Automating Mundane Tasks 自动化日常任务

Task automation has always been a major aim of AI development. Language models have always been able to carry out syntax analysis, finding patterns in text and responding appropriately.

Large language models have an advantage with semantic analysis 语义分析, enabling the model to understand the underlying meaning and context, giving it a higher level of accuracy.

This can be applied to a number of basic tasks like

text summarising, text rephrasing, and text generation.

Emergent Abilities 新兴能力

Emergent Abilities are

unexpected but impressiveabilities LLMs have due to the high amount of data they are trained on.These behaviours are usually discovered when the model is used rather than when it is programmed.

Examples include multi-step arithmetic, taking college-level exams, and chain-of-thought prompting. 思维链提示

Drawbacks of LLMs

LLM Subject

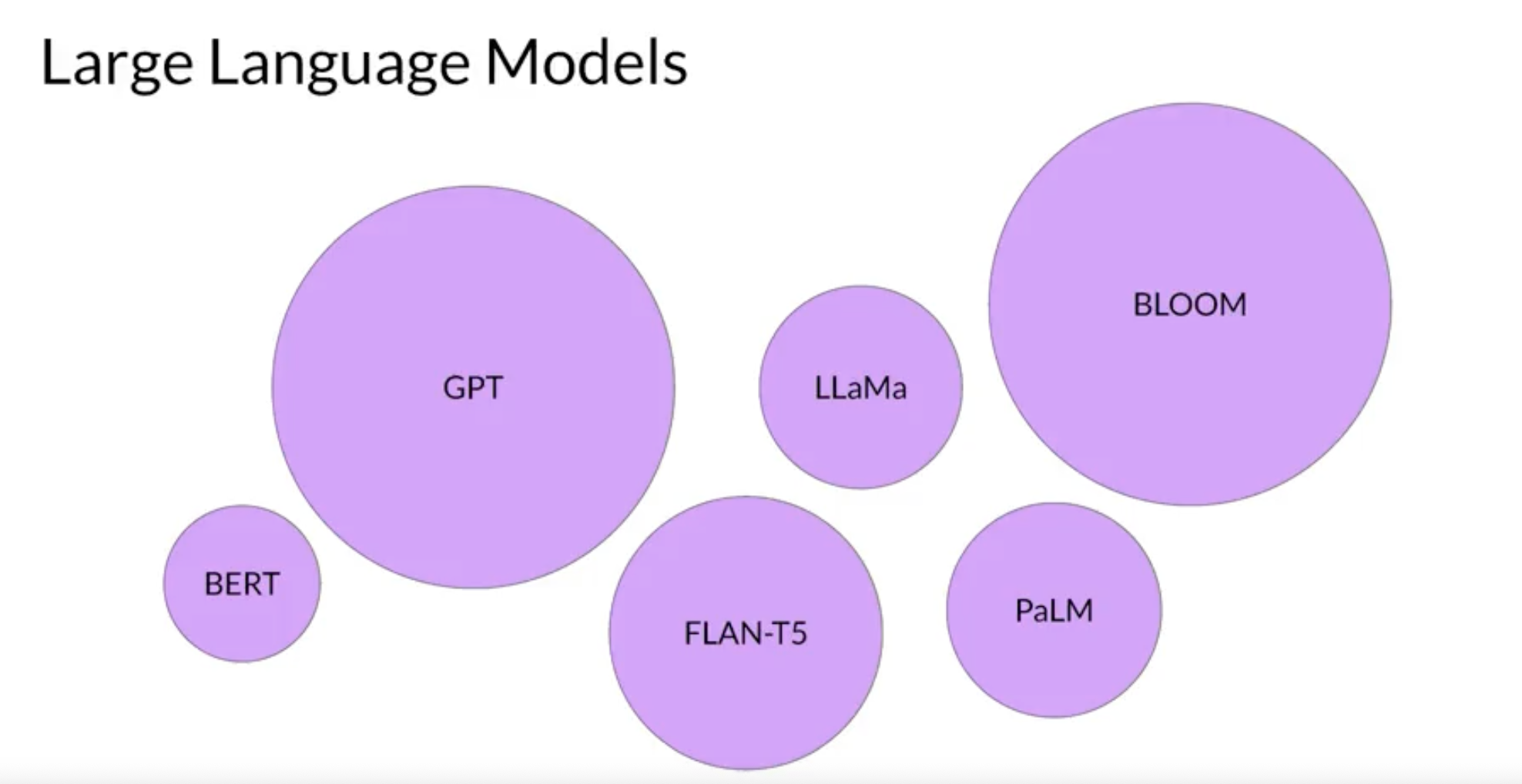

Large language models

- Large language models have been trained on trillions of words over many weeks and months, and with large amounts of compute power.

- These foundation models with billions of parameters, exhibit emergent properties beyond language alone, and researchers are unlocking their ability to break down complex tasks, reason, and problem solve.

foundation models (base models)

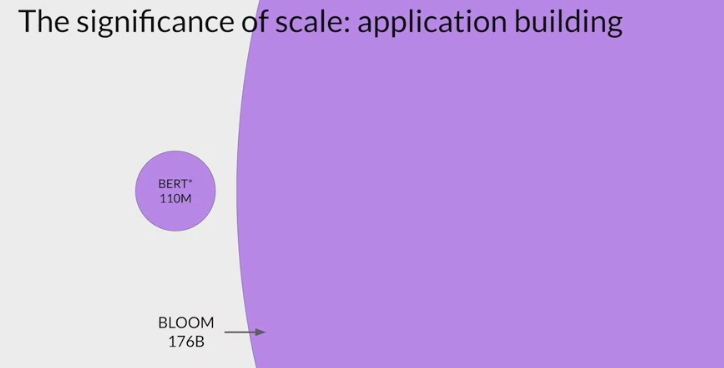

- their relative size in terms of their parameters.

- parameters:

- the model’s

memory. - the more parameters a model has, the more memory, the more

sophisticatedthe tasks it can perform.

- the model’s

- By either using these models as they are or by applying fine tuning techniques to adapt them to the specific use case, you can rapidly

build customized solutions without the need to train a new modelfrom scratch.

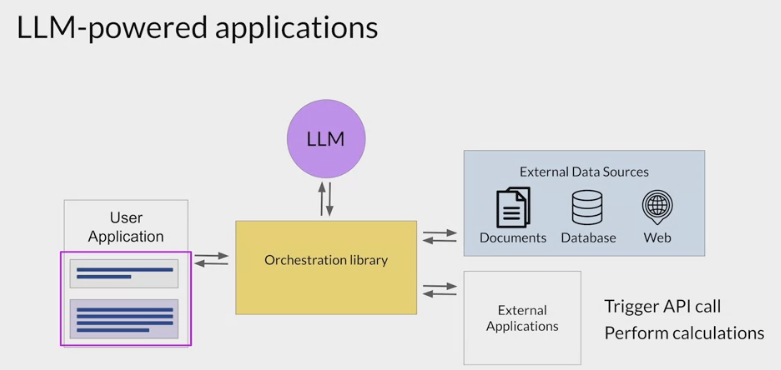

Augmenting LLMs

- connecting LLM to external data sources or using them to invoke external APIs.

- use this ability to provide the model with information it doesn’t know from its pre-training and to enable the model to power interactions with the real-world.

Interact

other machine learning and programming paradigms: write computer code with formalized syntax to interact withlibraries and APIs.large language models: able to take natural language or human written instructions and perform tasks much as a human would.

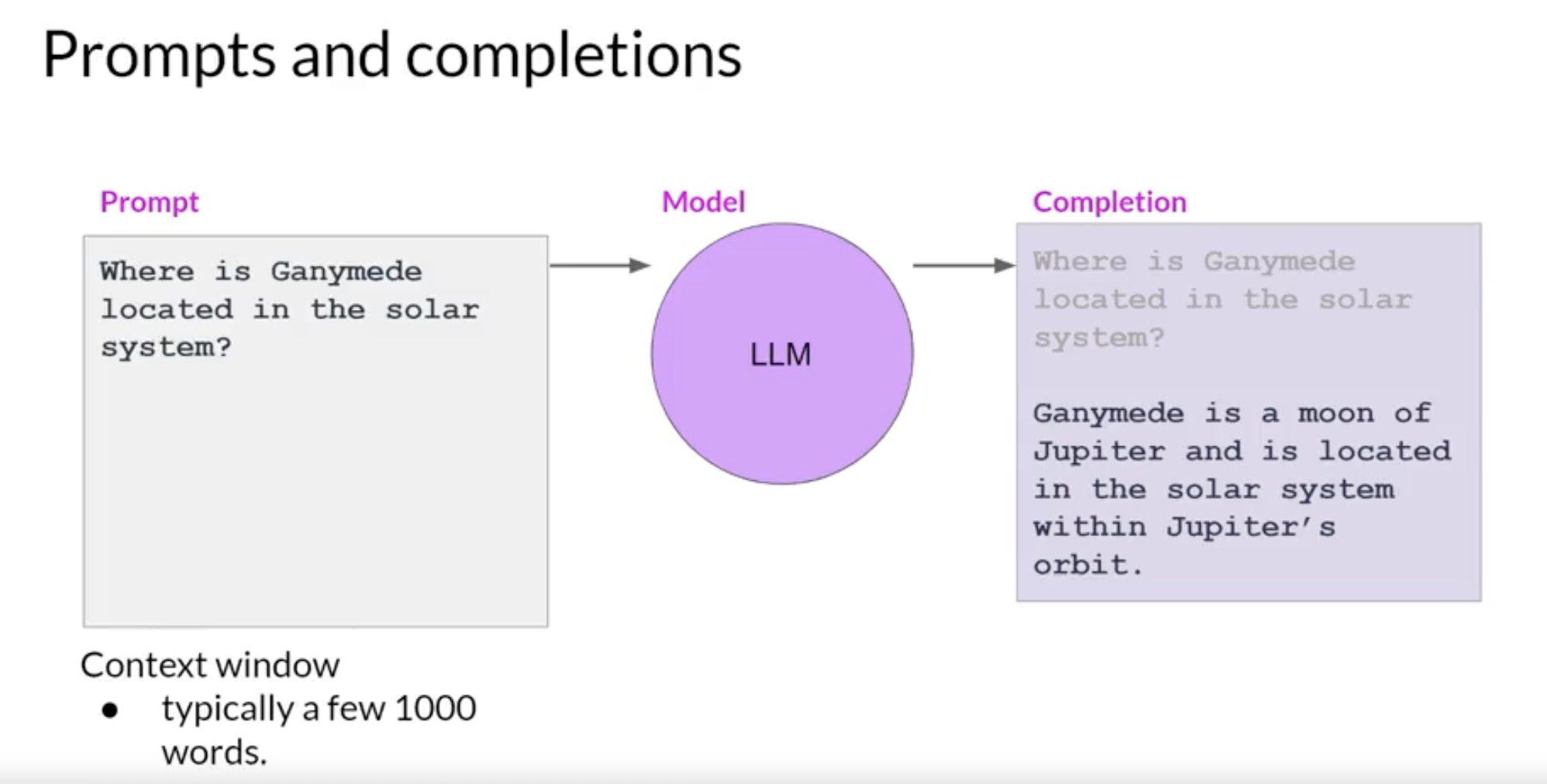

prompt

- The text that you pass to an LLM

The space or memory that is available to the prompt is called the

context window, and this is typically large enough for a few thousand words, but differs from model to model.- example

- ask the model to determine where Ganymede is located in the solar system.

- The prompt is passed to the model, the model then predicts the next words, and because the prompt contained a question, this model generates an answer.

- The output of the model is called a

completion, and the act of using the model to generate text is known asinference. - The completion is comprised of the text contained in the original prompt, followed by the generated text.

GPU

- cloud class instance (from NVIDIA)

- google colab

- kaggle

- amazon sagemaker

- gradient

- microsoft azure

Tesla is graphics cards from NVIDIA for AI

pyTorch

- it does these heavy mathematical computation very easily with libraries

- it got a whole set of APIs and utilities that let you manipulate all of these different tensors

tensors

- a tensor is a computer data object (data structure) that represents numeric data,

- it could be floating point data values or data objects within data objects.

- 1d tensors (column)

- 2d tensors (xy)

- 3d tensors (xyz)

- 4d tensors (cube)

- 5d tensors

- 6d tensors

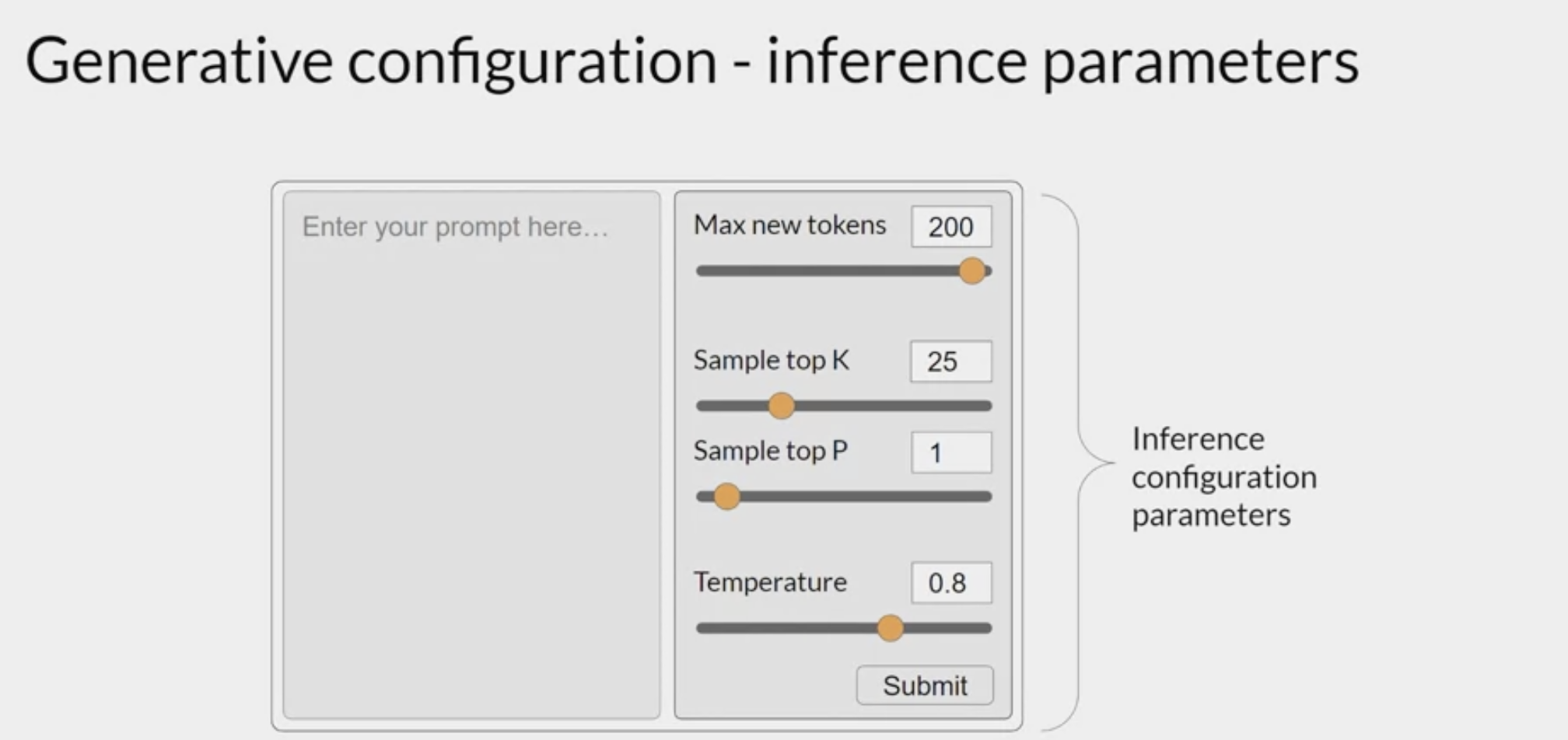

Generative configuration

- Each model exposes a set of configuration parameters that can influence the model’s output during inference.

- training parameters: learned during training time.

- configuration parameters: invoked at inference time and give control over things like the maximum number of tokens in the completion, and how creative the output is.

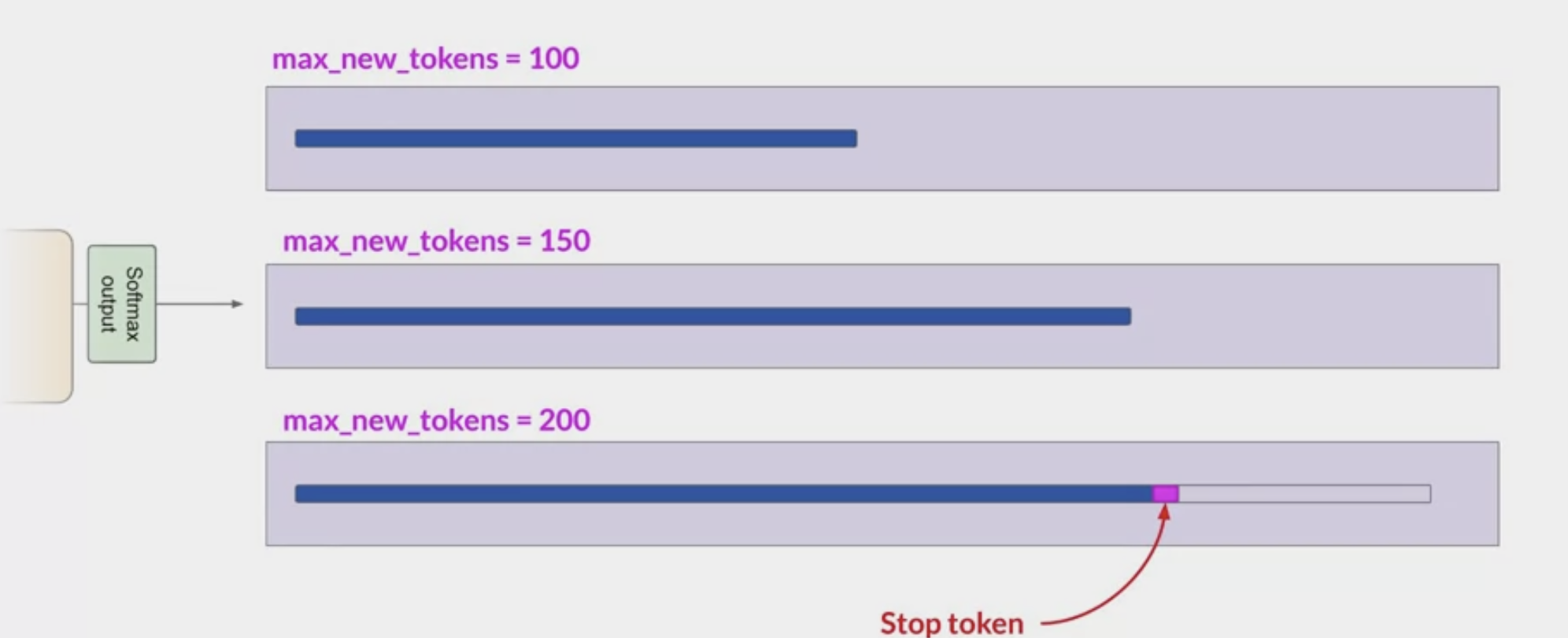

Max new tokens: limit the number of tokens that the model will generate.

- putting a cap on the number of times the model will go through the selection process.

the length of the completion is shorter

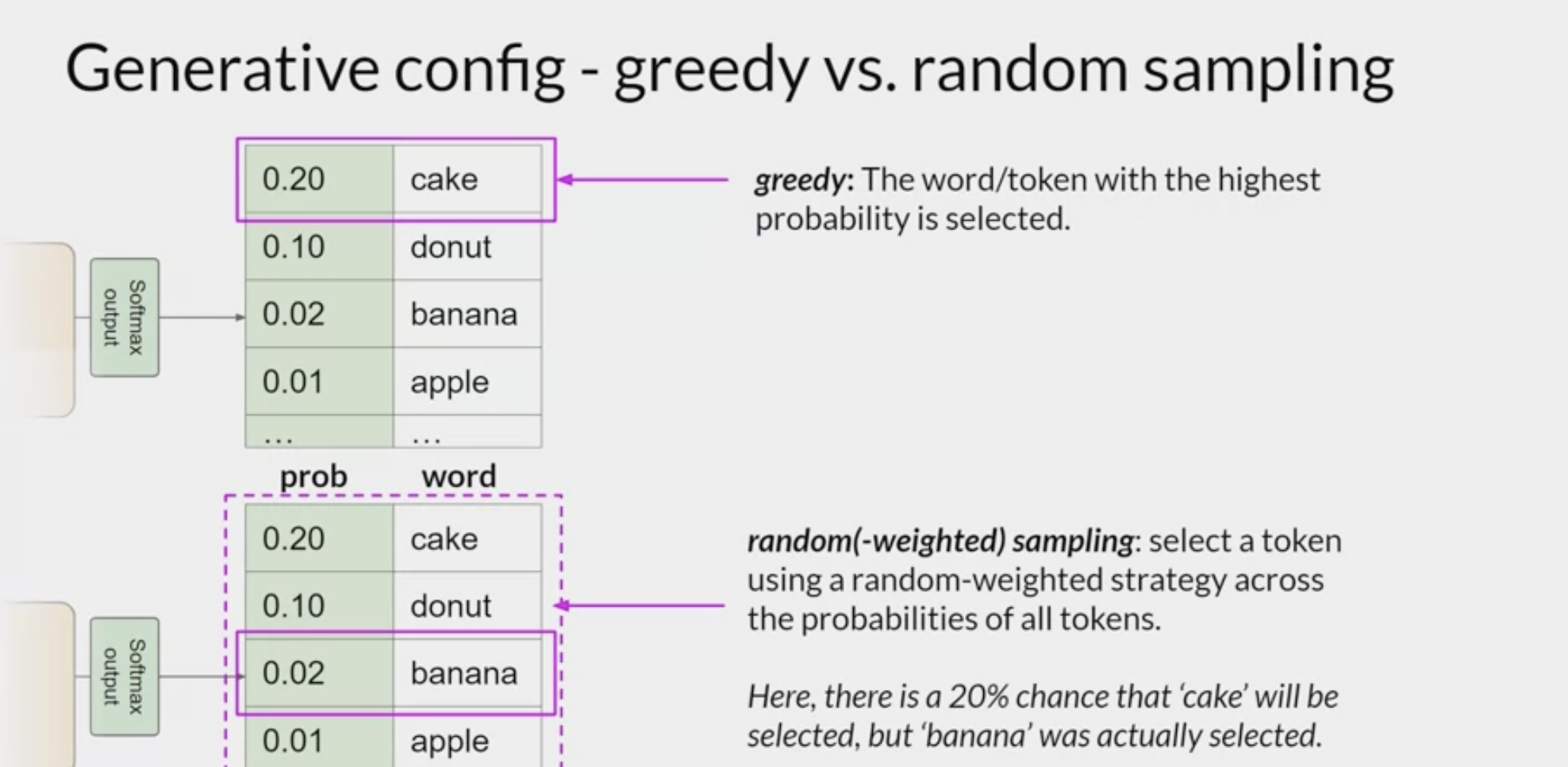

- The output from the

transformer's softmax layeris aprobability distributionacross the entire dictionary of words that the model uses.- Here you can see a selection of words and their probability score next to them.

- this is a list that carries on to the complete dictionary.

controls

to generate text that’s more natural, more creative and avoids repeating words, you need to use some other controls.

- in some implementations, you may need to disable greedy and enable random sampling explicitly.

- For example, the Hugging Face transformers implementation that we use in the lab requires that we set do sample to equal true.

greedy decoding- Most large language models by default will operate with

greedy decoding. - the simplest form of next-word prediction

- the model will always choose the word with the highest probability.

- This method can work very well for short generation but is susceptible to repeated words or repeated sequences of words.

- Most large language models by default will operate with

Random sampling- the easiest way to introduce some variability.

- Instead of selecting the most probable word every time with random sampling, the model

chooses an output word at random using the probability distribution to weight the selection. depending on the setting, there is a possibility that the output may be too creative, producing words that cause the generation to wander off into topics or words that just don’t make sense.

For example, in the illustration, the word banana has a probability score of 0.02. With random sampling, this equates to a 2% chance that this word will be selected. By using this sampling technique, we reduce the likelihood that words will be repeated.

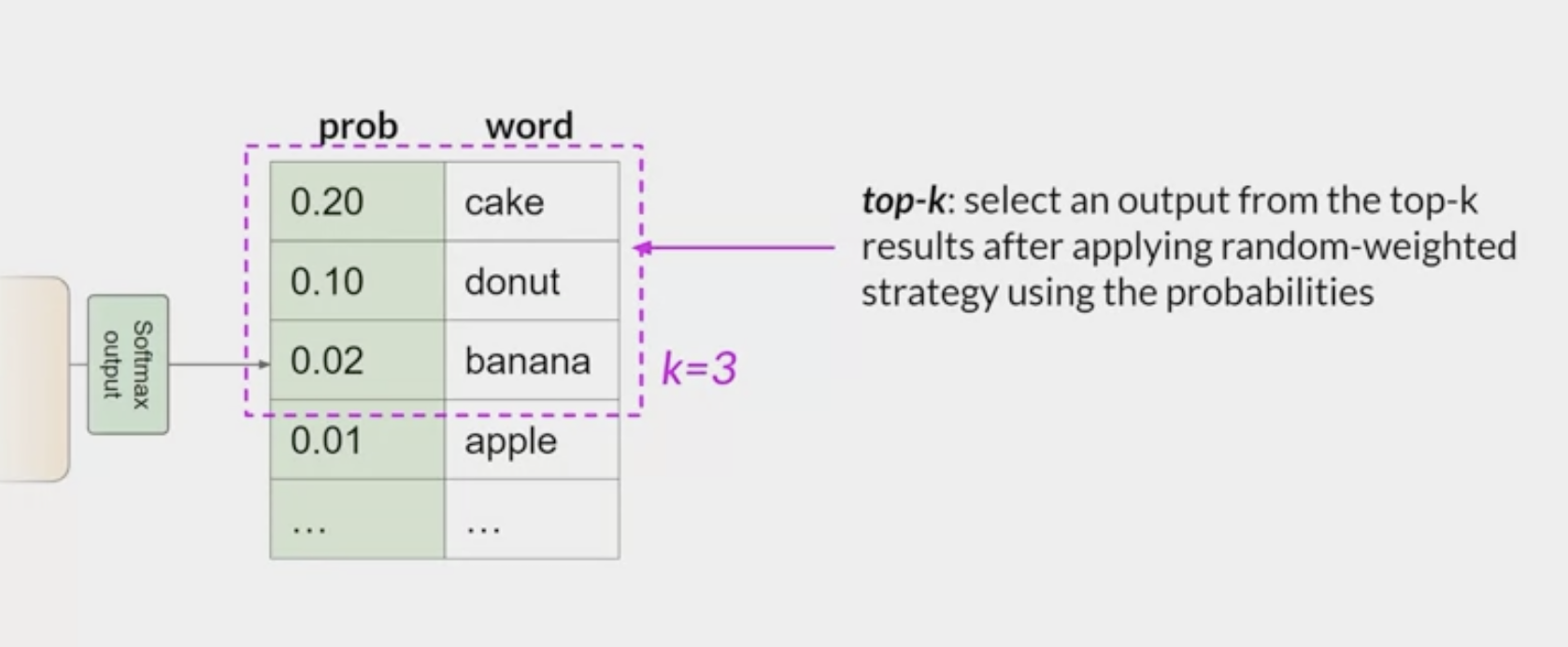

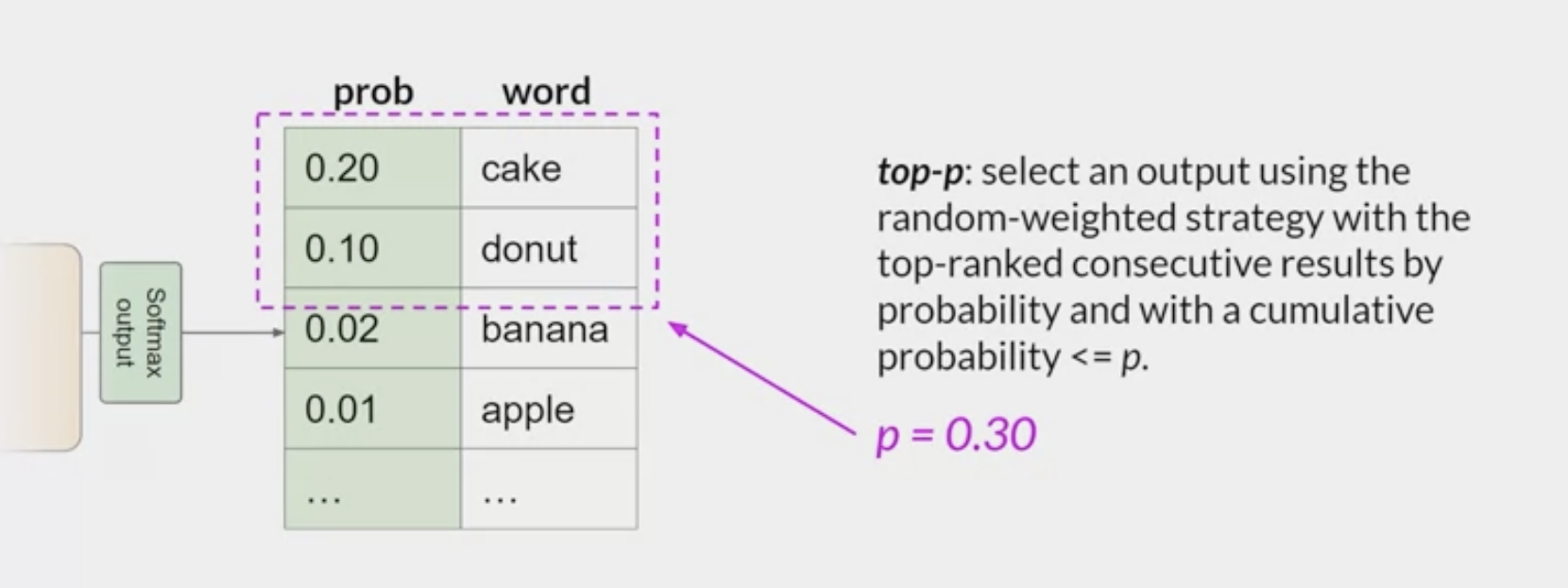

top k, top p sampling techniques

help

limit the random samplingandincrease the chance that the output will be sensible.- With

top k, you specify the number of tokens to randomly choose from - with

top p, you specify the total probability that you want the model to choose from.

- With

top kvalue:- limit the options while still allowing some variability

- instructs the model to choose from only the k tokens with the highest probability.

- In this example here, k is set to three, so you’re restricting the model to choose from these three options. The model then selects from these options using the probability weighting and in this case, it chooses donut as the next word.

- This method can help the model have some randomness while preventing the selection of highly improbable completion words.

- This in turn makes the text generation more likely to sound reasonable and to make sense.

top psetting:- limit the random sampling to the predictions whose combined probabilities do not exceed p.

- For example, if you set p to equal 0.3, the options are cake and donut since their probabilities of 0.2 and 0.1 add up to 0.3. The model then uses the random probability weighting method to choose from these tokens.

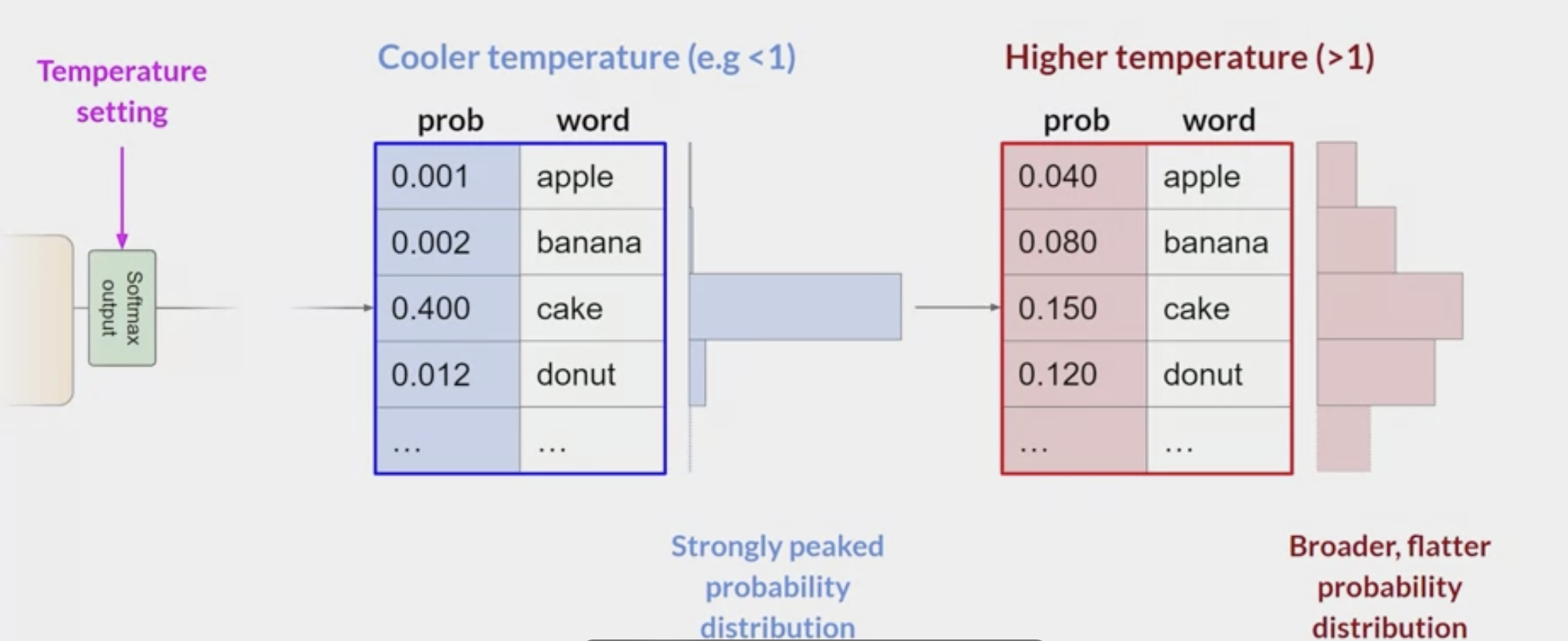

temperature

- control the randomness of the model output

can be adjusted to either increase or decrease randomness within the model output layer (softmax layer)

influences

the shape of the probability distributionthat the model calculates for the next token.- The temperature value is a

scaling factor that's applied within the final softmax layer of the modelthat impactsthe shape of the probability distribution of the next token. - In contrast to the

top k & pparameters, changing the temperature actually alters the predictions that the model will make.

- The temperature value is a

- Broadly speaking, the higher the temperature, the higher the randomness, and the lower the temperature, the lower the randomness.

low value of temperature, say less than one,

- the resulting probability distribution from the softmax layer is more strongly peaked with the probability being concentrated in a smaller number of words.

- blue bars in the table show a probability bar chart turned on its side.

- Most of the probability here is concentrated on the word cake. The model will select from this distribution using random sampling and the resulting text will be less random and will more closely follow the most likely word sequences that the model learned during training.

if set the temperature to a higher value, say, greater than one, then the model will calculate a broader flatter probability distribution for the next token.

- Notice that in contrast to the blue bars, the probability is more evenly spread across the tokens.

- This leads the model to generate text with a

higher degree of randomness and more variabilityin the output compared to acool temperature setting. - This can help you generate text that sounds more creative.

- If leave the temperature value equal to one, this will leave the softmax function as default and the unaltered probability distribution will be used.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

generation_config = GenerationConfig(max_new_tokens=50)

# generation_config = GenerationConfig(max_new_tokens=10)

# generation_config = GenerationConfig(max_new_tokens=50, do_sample=True, temperature=0.1)

# generation_config = GenerationConfig(max_new_tokens=50, do_sample=True, temperature=0.5)

# generation_config = GenerationConfig(max_new_tokens=50, do_sample=True, temperature=1.0)

inputs = tokenizer(few_shot_prompt, return_tensors='pt')

output = tokenizer.decode(

model.generate(

inputs["input_ids"],

generation_config=generation_config,

)[0],

skip_special_tokens=True

)

print(dash_line)

print(f'MODEL GENERATION - FEW SHOT:\n{output}')

print(dash_line)

print(f'BASELINE HUMAN SUMMARY:\n{summary}\n')

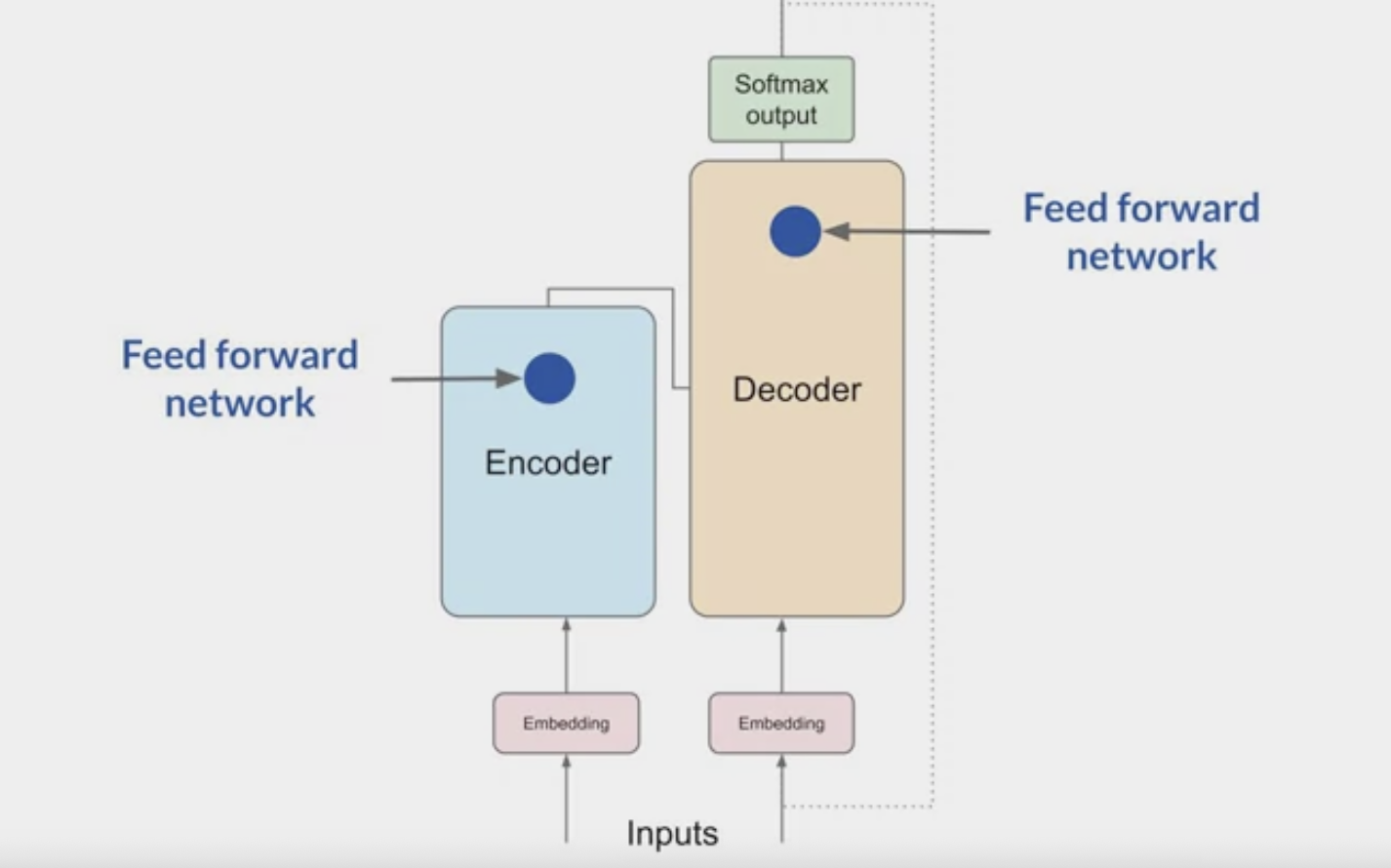

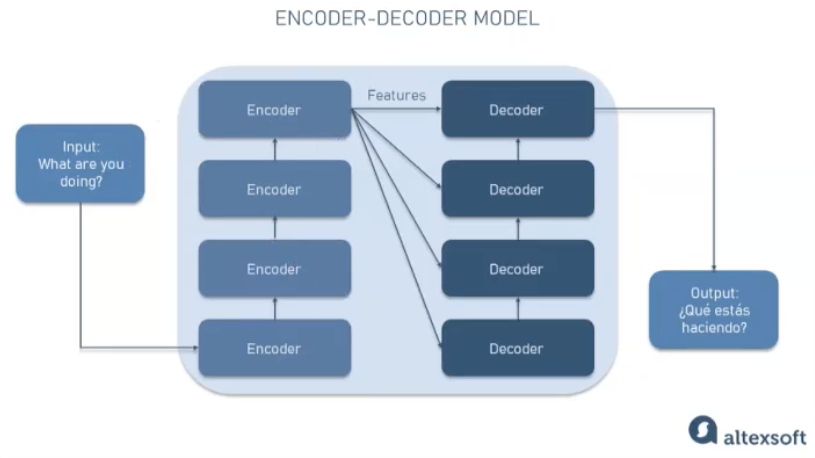

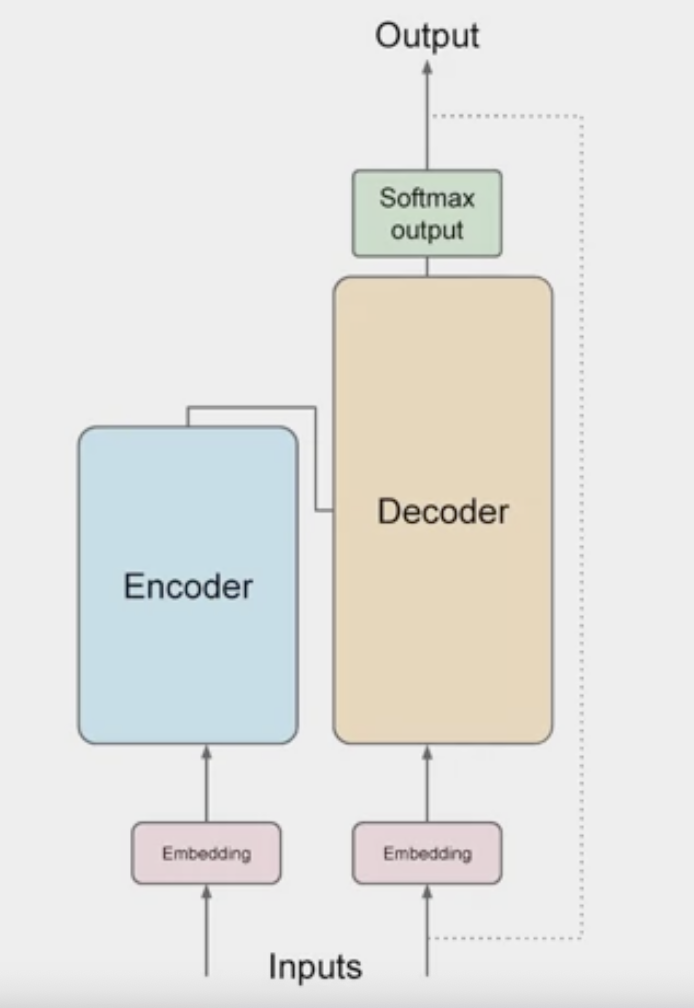

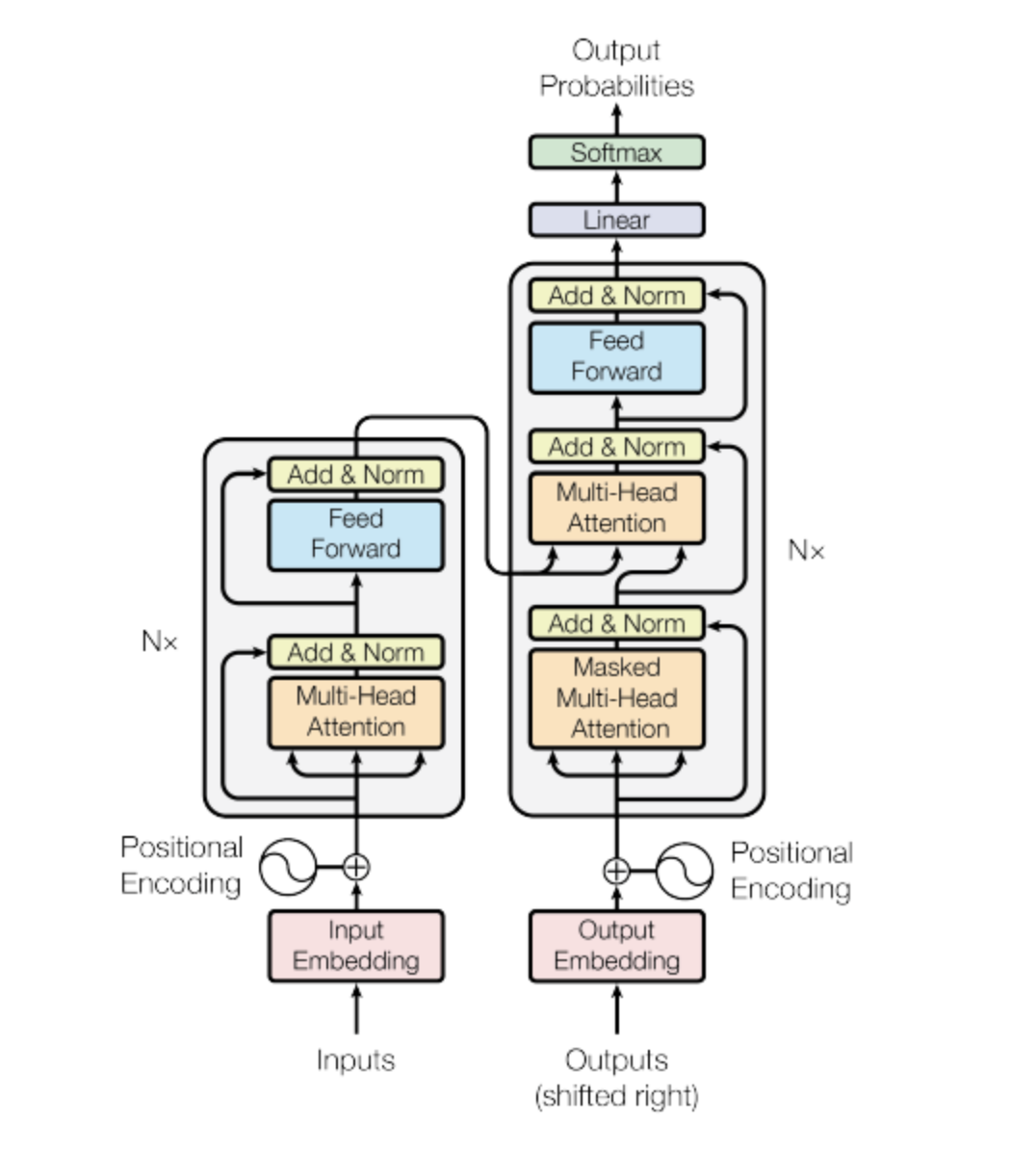

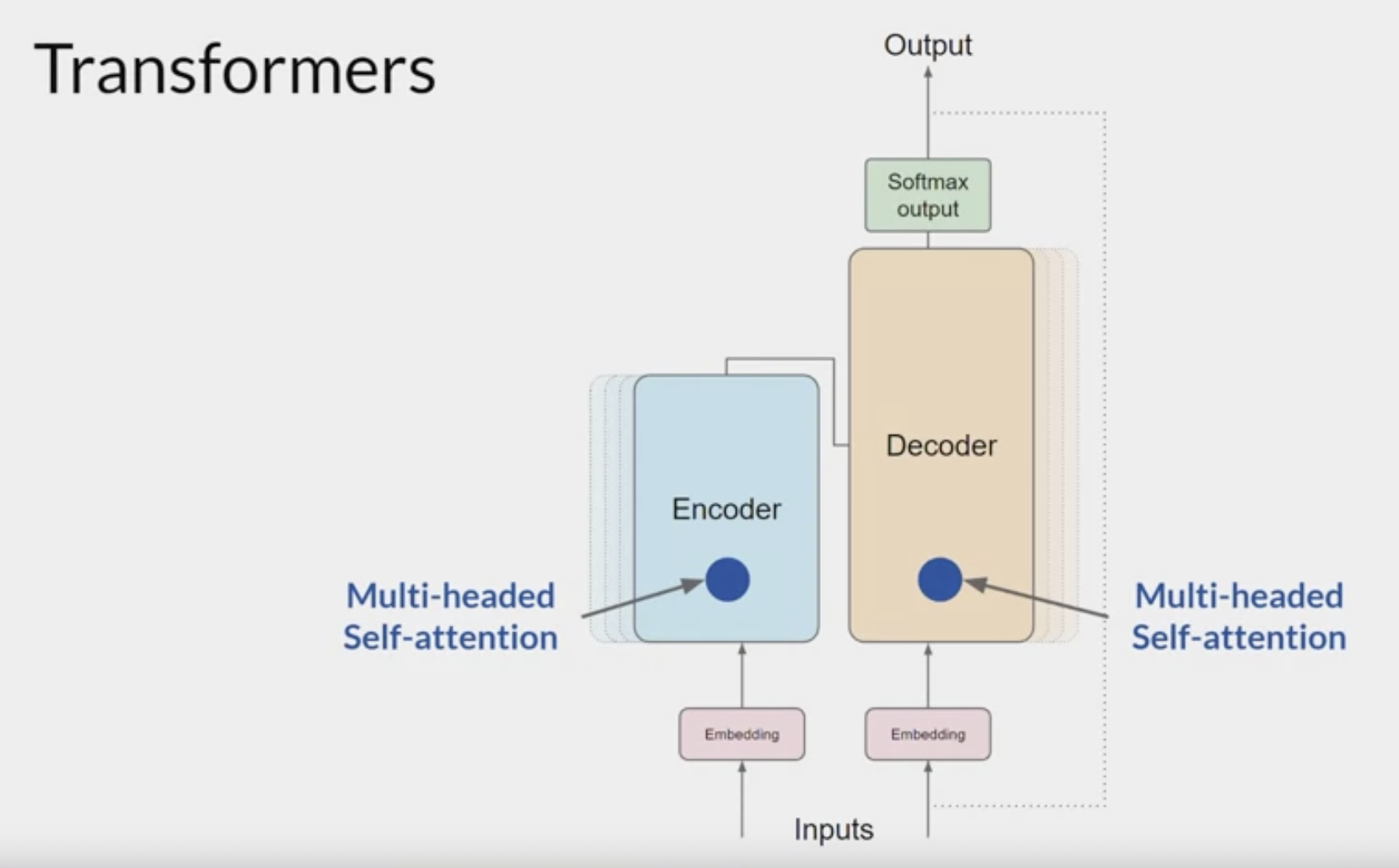

Encoder & Decoder

The transformer architecture is split into two distinct parts

- the encoder and the decoder.

- These components work in conjunction with each other and they share a number of similarities.

The encoder

encodes input sequences into a deep representation of the structure and meaning of the input.The decoder, working from input token triggers,

uses the encoder's contextual understanding to generate new tokens.It does this in a loop until some stop condition has been reached.

the inputs to the model are at the bottom and the outputs are at the top

Encoder vs Decoder

- Autoencoder models

- referred to as a Masked Language Model (MLM),

- the model is trained to predict masked tokens (tokens that have been intentionally masked out) in a sequence.

- This approach helps the model learn bidirectional relationships between tokens in the input sequence.

- Autoregressive models

- use causal language modeling with the objective to guess the next token based on the previous sequence of tokens.

- focus on predicting the next token or word making them best suited for text generation tasks.

- sequence-to-sequence (Seq2Seq) models

- These models can take an input sequence (e.g., a sentence in one language) and generate an output sequence (e.g., the translated sentence in another language) in a parallel and efficient manner .

- They achieve this by employing an encoder-decoder architecture with attention mechanisms, which allows them to handle variable-length input and output sequences effectively.

- well-suited to the task of text translation

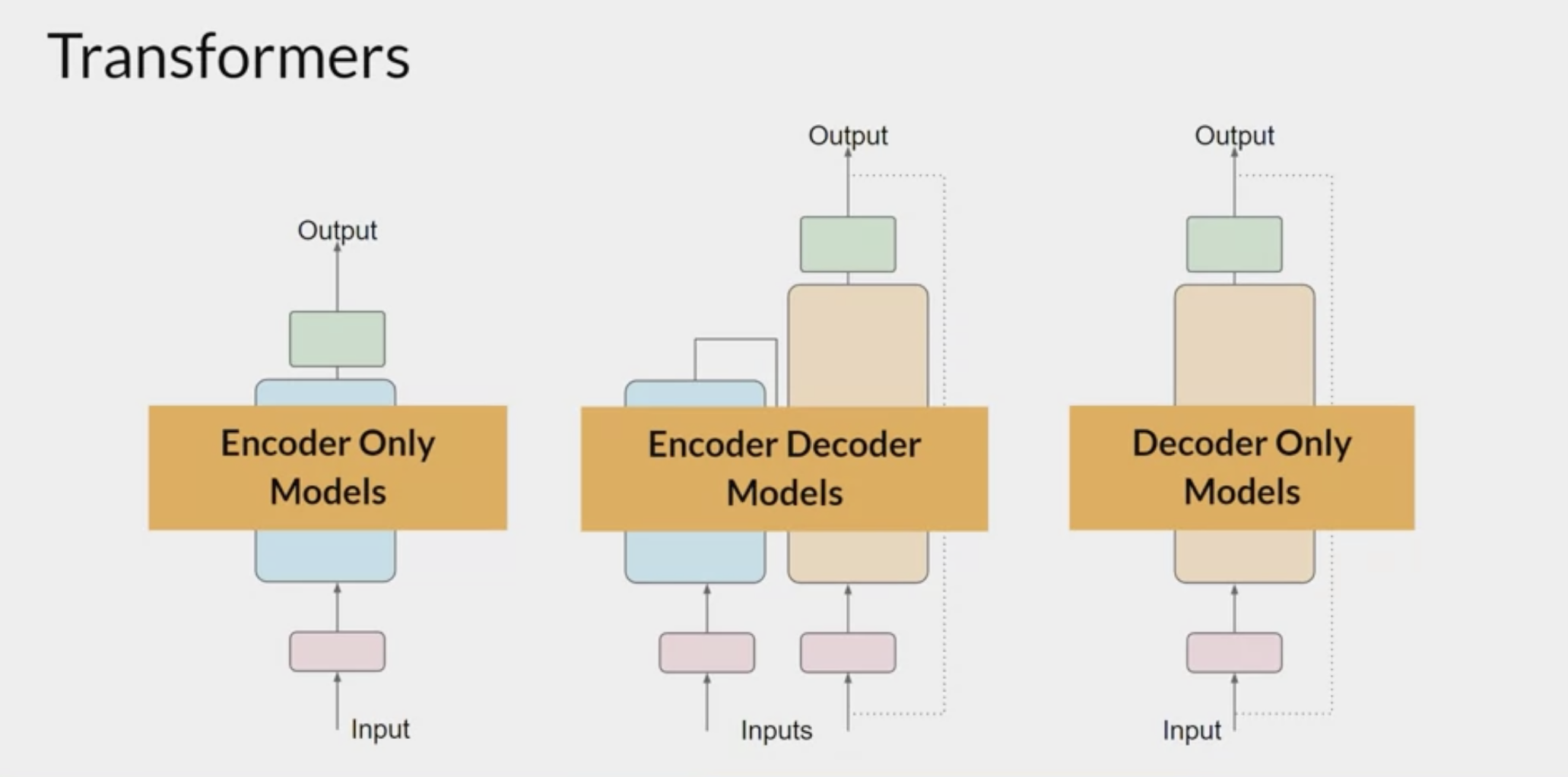

Encoder-only models

- also work as sequence-to-sequence (Seq2Seq) models

- particularly those based on transformers, are widely used for tasks such as text translation.

- without further modification, the input sequence and the output sequence or the same length, less common these days,

- by adding additional layers to the architecture, you can train encoder-only models to perform

classification taskssuch as sentiment analysis, - encoder-only model example: BERT

Encoder-decoder models

- perform well on sequence-to-sequence tasks such as

translation - the input sequence and the output sequence can be different lengths.

- You can also scale and train this type of model to perform

general text generation tasks. - encoder-decoder models examples: BART, T5

- perform well on sequence-to-sequence tasks such as

- decoder-only models

- the most commonly used today.

- as they have scaled, their capabilities have grown. These models can now generalize to most tasks.

- Popular decoder-only models: GPT family of models, BLOOM, Jurassic, LLaMA, and many more.

LLM 为什么都用 Decoder only 架构?[^LLM为什么都用Decoderonly架构]

- 训练效率和工程实现上的优势

- 在理论上是因为 Encoder 的双向注意力会存在低秩问题,这可能会削弱模型表达能力;

- 另一方面,就生成任务而言,引入双向注意力并无实质好处。

- 而 Encoder-Decoder 架构之所以能够在某些场景下表现更好,大概只是因为它多了一倍参数。

- 所以,在同等参数量, 同等推理成本下,Decoder-only 架构就是最优选择了。

- 训练效率: Decoder-only 架构只需要进行单向的自回归预测,而 Encoder-Decoder 架构需要进行双向的自编码预测和单向的自回归预测,计算量更大;

- 工程实现: Decoder-only 架构只需要一个模块,而 Encoder-Decoder 架构需要两个模块,并且需要处理两者之间的信息传递和对齐,实现起来更复杂;

- 理论分析: Encoder 的双向注意力会存在低秩问题,即注意力矩阵的秩随着网络深度的增加而降低2,结论是如果没有残差连接和 MLP 兜着,注意力矩阵会朝秩为 1 的矩阵收敛,最后每个 token 的表示都一样了,网络就废了,这可能会削弱模型的表达能力。而 Decoder 的单向注意力则不存在这个问题;

- 生成任务: 对于文本生成任务,Encoder 的双向注意力并无实质好处,因为它会引入右侧的信息,破坏了自回归的假设。而 Decoder 的单向注意力则可以保持自回归的一致性。

How the model works

machine-learning models are just big statistical calculators and they work with numbers, not words.

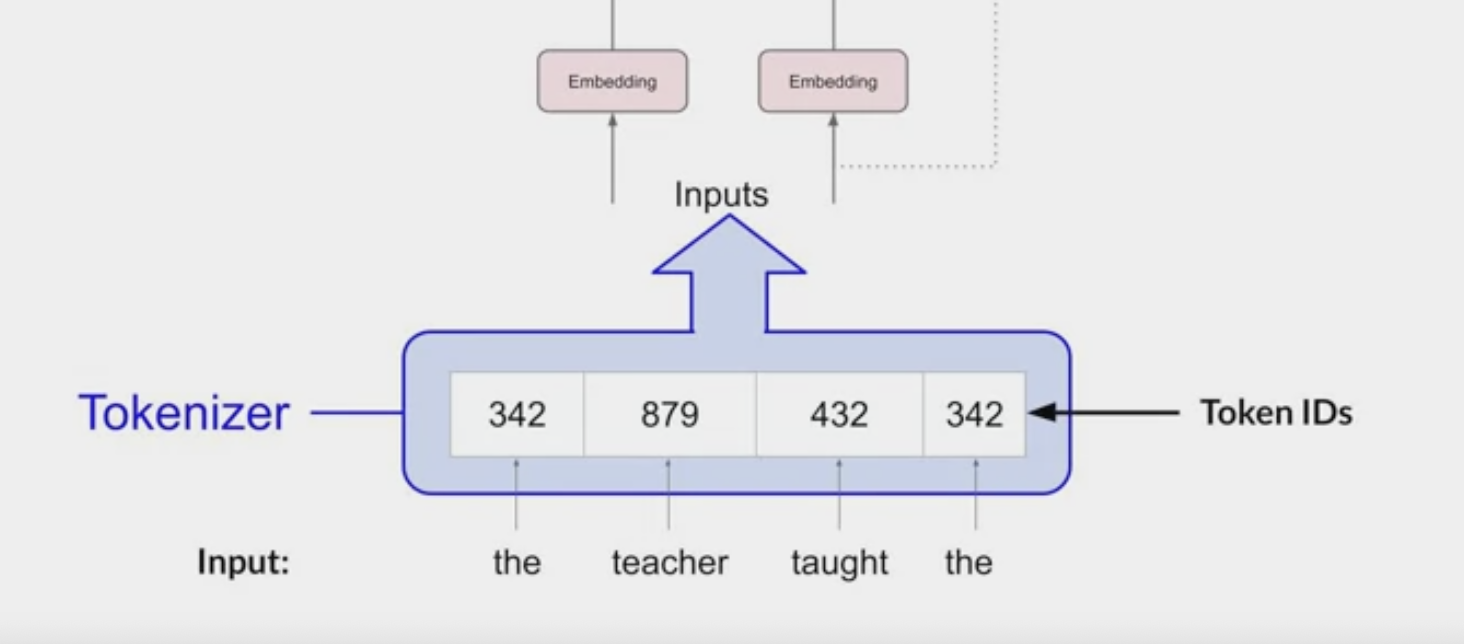

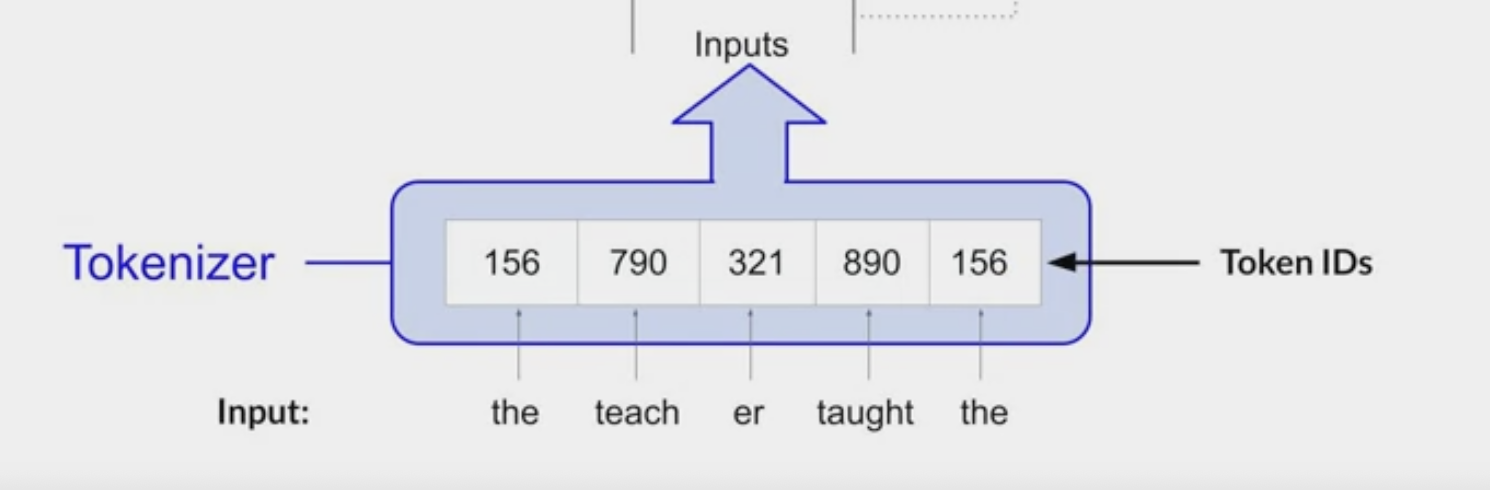

Tokenize:

- Before passing texts into the model to process, must first tokenize the words.

- converts the words into numbers

each number representing a position in a dictionary of all the possible words that the model can work with.

You can choose from multiple tokenization methods. For example,

- once you’ve selected a tokenizer to train the model, you must use the same tokenizer when you generate text.

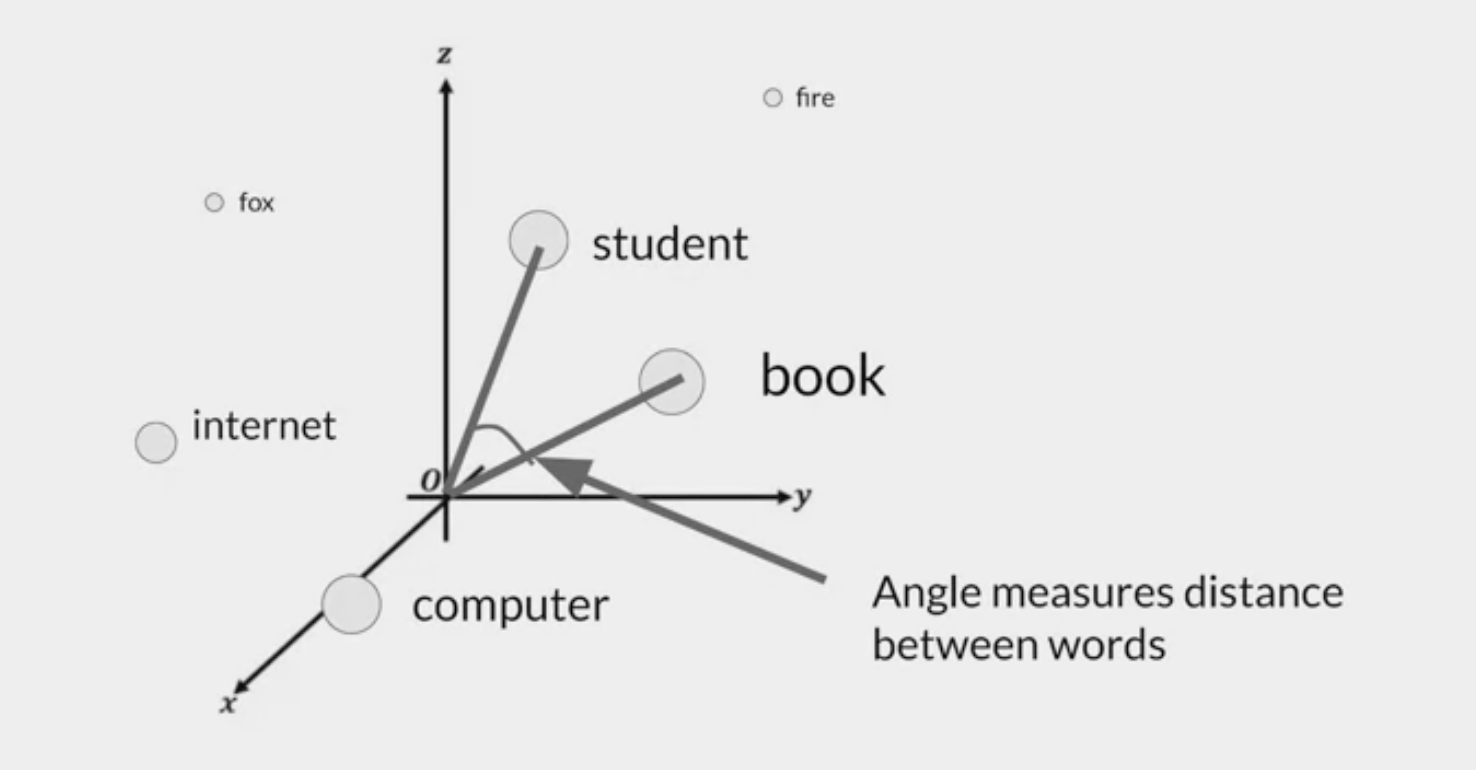

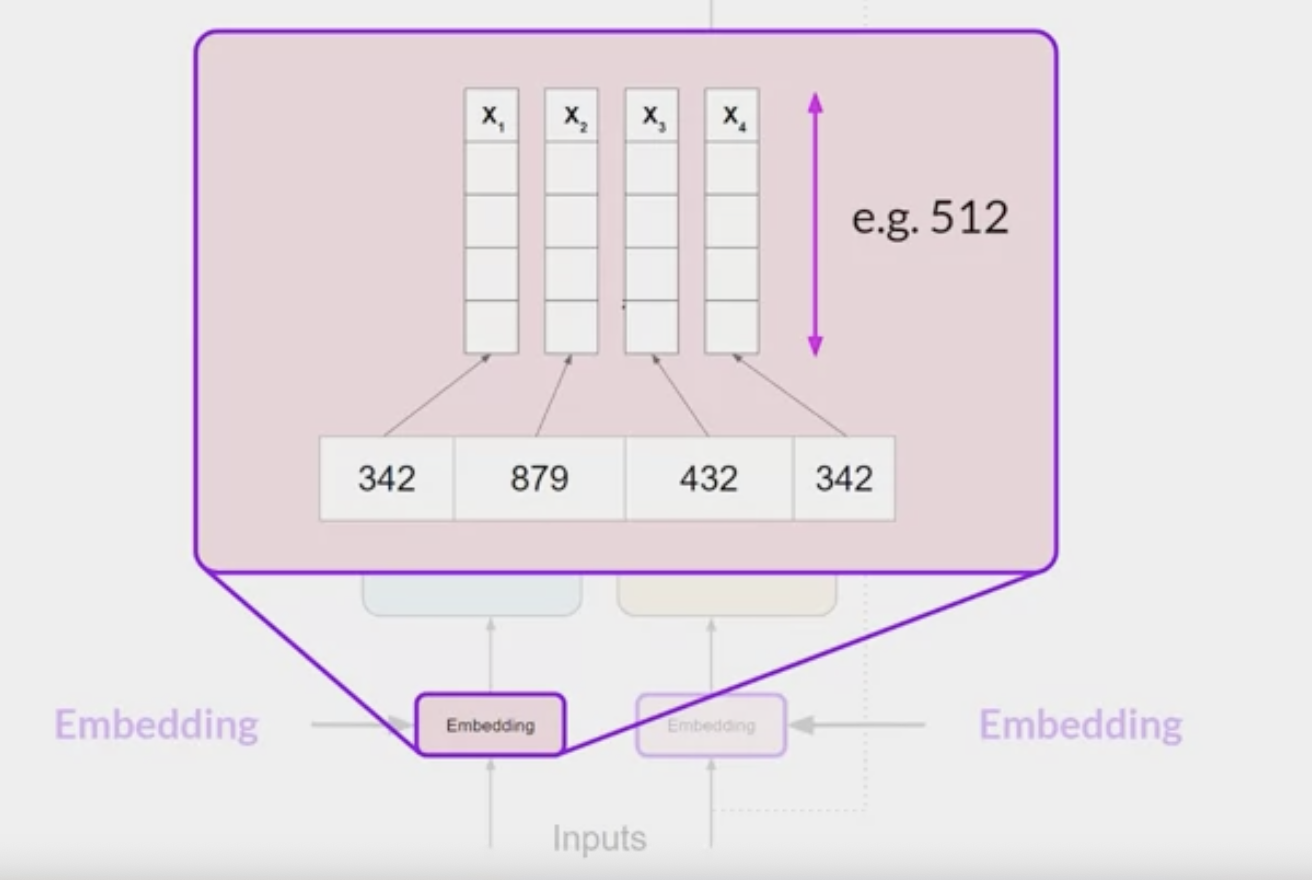

Token Embedding:

- Now that the input is represented as numbers, pass it to the embedding layer.

- This layer is a

trainable vector embedding space, - high-dimensional space where each token is represented as a vector and occupies a unique location within that space.

Each token ID in the vocabulary is matched to a

multi-dimensional vector, and the intuition is that these vectors learn toencode the meaning and context of individual tokens in the input sequence.Embedding vector spaces have been used in natural language processing for some time, previous generation language algorithms like Word2vec use this concept.

each word has been matched to a token ID, and each token is mapped into a vector.

- In the original transformer paper, the vector size was actually 512

- For simplicity

- imagine a vector size of just three, you could plot the words into a three-dimensional space and see the relationships between those words.

- relate words that are located close to each other in the embedding space, and calculate the distance between the words as an angle, which gives the model the ability to mathematically understand language.

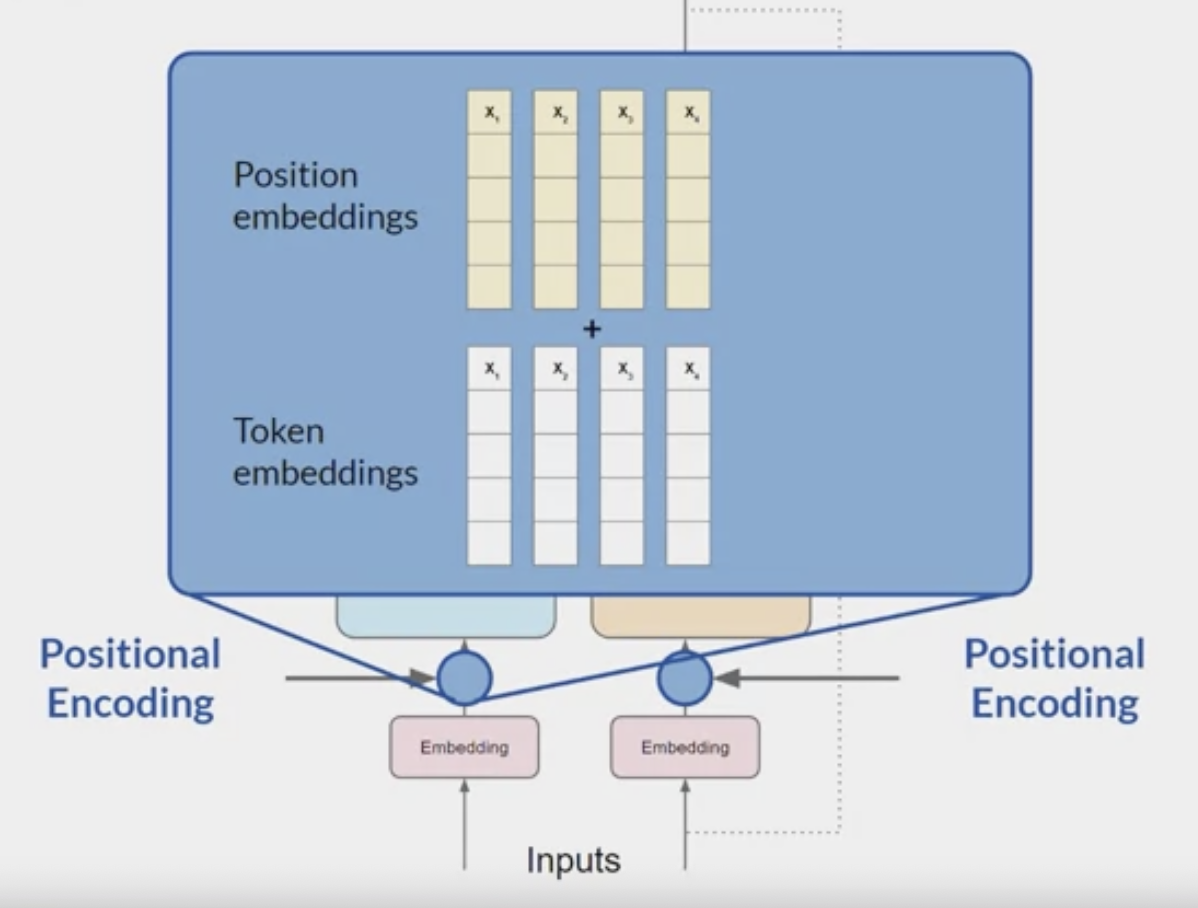

Positional Encoding:

Self-attention

A mechanism that allows a model to focus on

different partsof the input sequence during computation.enables the transformer to weigh the importance of different tokens in the input sequence when processing each token

- allow the model to capture dependencies and relationships between tokens regardless of their distance in the sequence

sum the

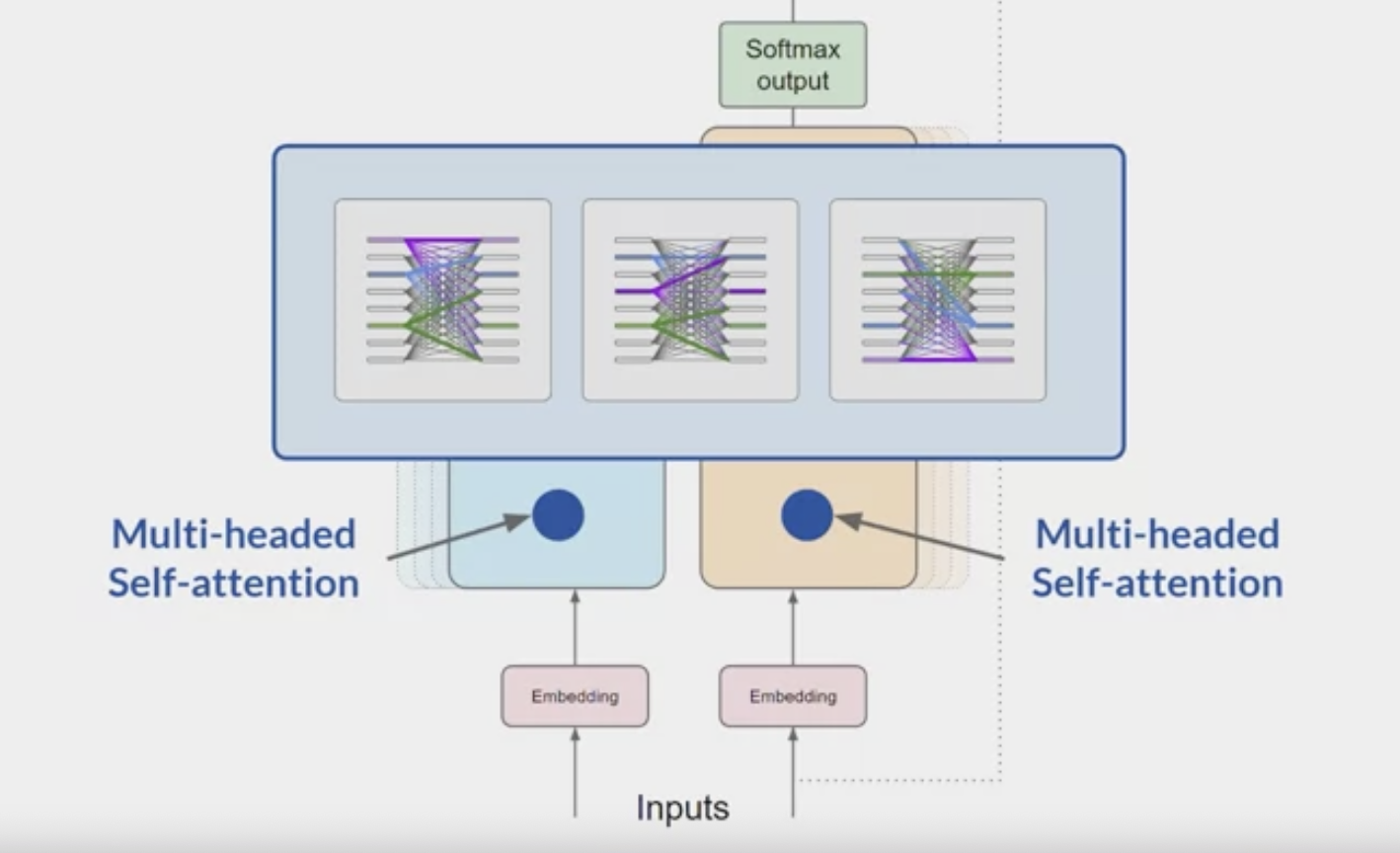

input tokensand thepositional encodings, pass the resulting vectors to the self-attention layer.the model analyzes the relationships between the tokens in the input sequence, it allows the model to attend to different parts of the input sequence to better capture the contextual dependencies between the words.

The

self-attention weightsthat are learned during training and stored in these layers reflectthe importance of each word in that input sequence to all other words in the sequence.

- But this does not happen just once, the transformer architecture actually has

multi-headed self-attention. This means that

multiple sets of self-attention weights or headsare learned in parallel independently of each other.The number of attention heads included in the attention layer varies from model to model, but numbers in the range of 12-100 are common.

each self-attention head will learn a different aspect of language. For example,

don’t dictate ahead of time what aspects of language the attention heads will learn.

- The weights of each head are randomly initialized and given sufficient training data and time,

- each will learn different aspects of language.

- While some attention maps are easy to interpret, like the examples discussed here, others may not be.

- Now that all of the attention weights have been applied to the input data, the output is processed through a

fully-connected feed-forward network.

- The output of this layer is a vector of logits proportional to the probability score for each and every token in the tokenizer dictionary

Softmax

- then pass these logits to a final softmax layer, where they are normalized into a

probability score for each word. - This output includes a probability for every single word in the vocabulary, so there’s likely to be thousands of scores here.

- One single token will have a score higher than the rest.

- This is the most likely predicted token.

there are a number of methods that you can use to vary the final selection from this vector of probabilities.

- then pass these logits to a final softmax layer, where they are normalized into a

Overview prediction process

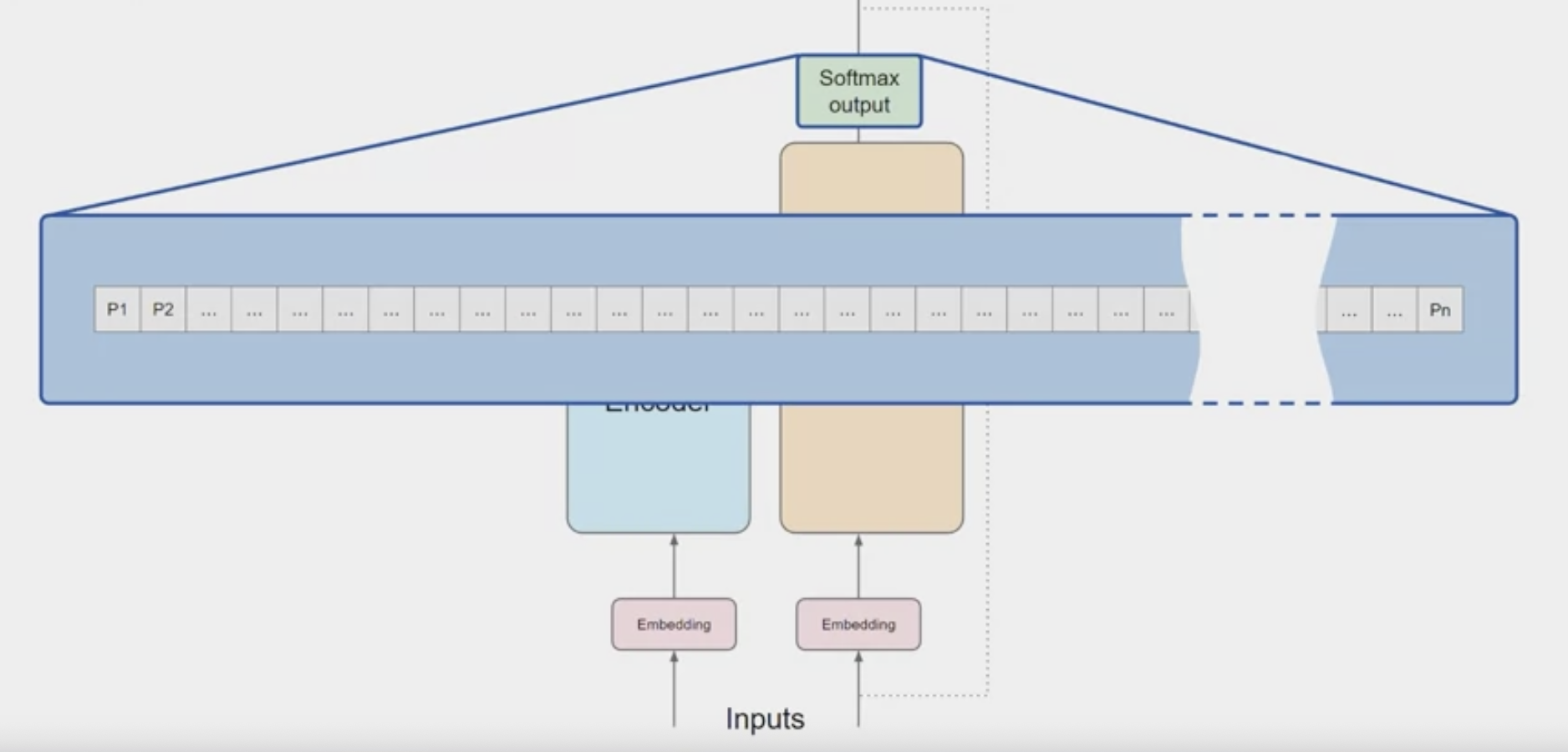

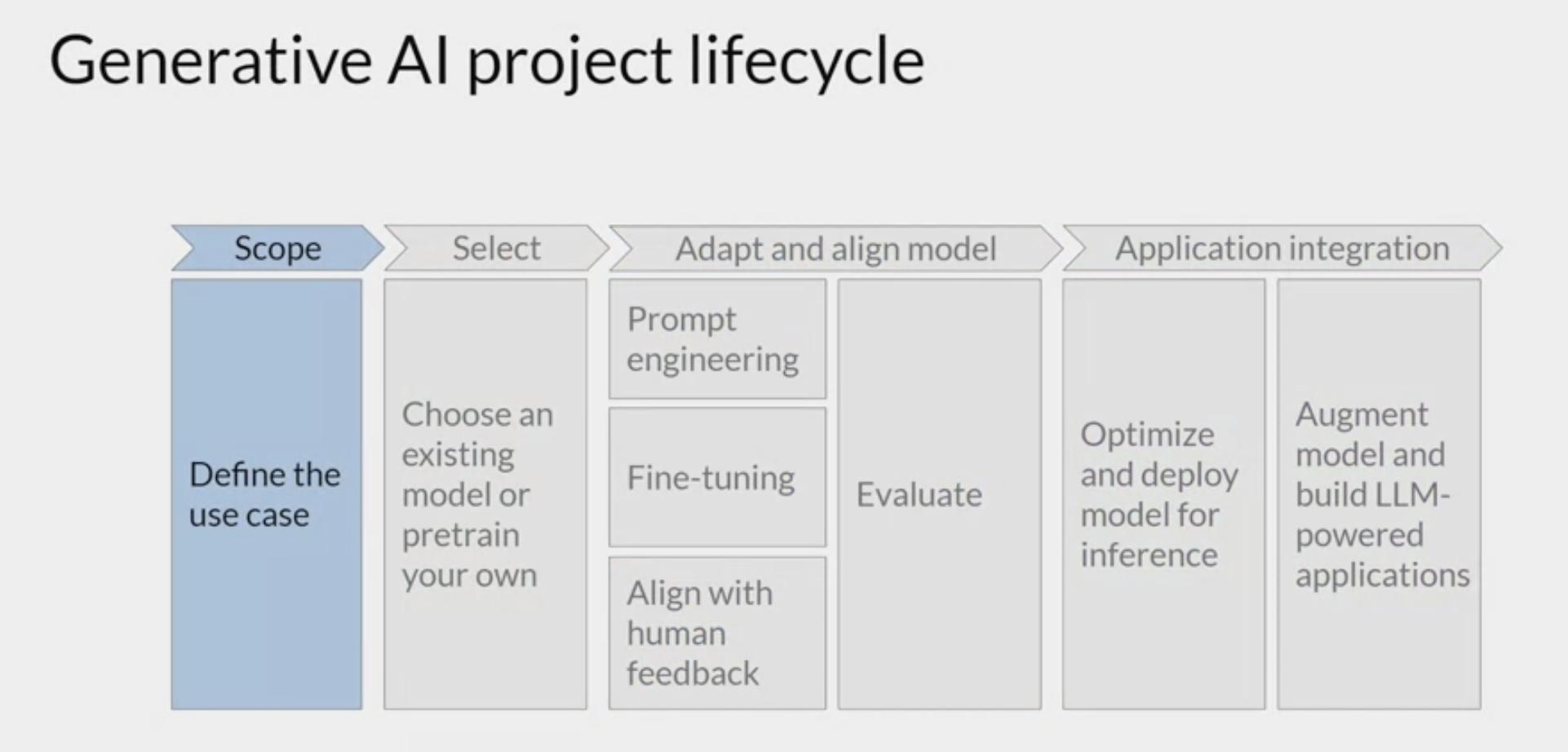

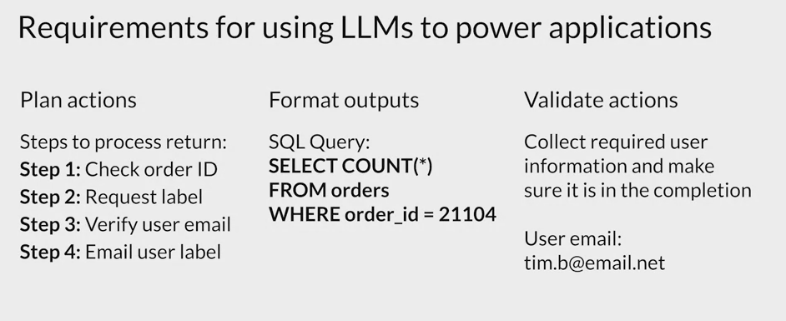

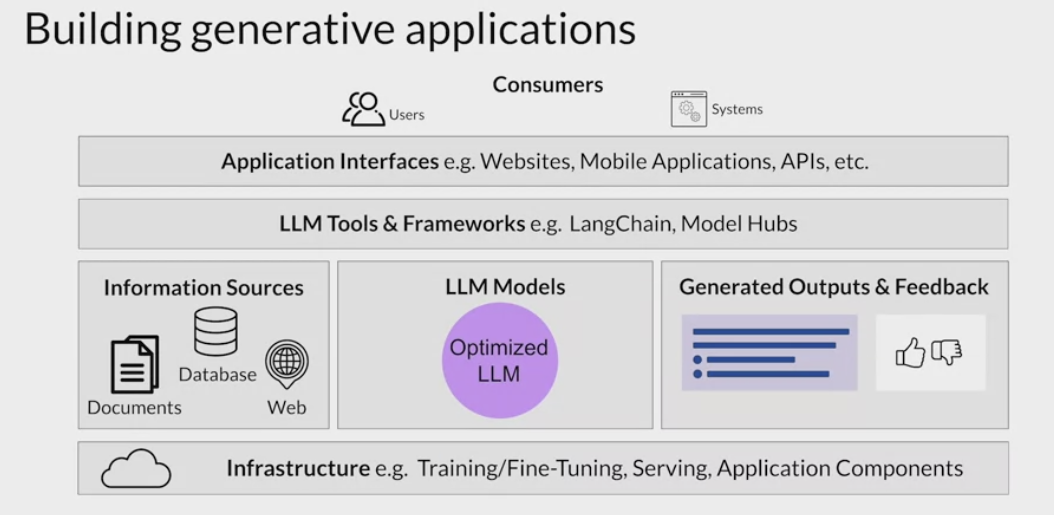

At a very high level, the workflow can be divided into three stages:

Data preprocessing / embedding:

- This stage involves storing private data to be retrieved later.

- Typically, the documents are broken into chunks, passed through an embedding model, then stored in a specialized database called a vector database.

Prompt construction / retrieval:

- When a user submits a query, the application constructs a series of prompts to submit to the language model.

- A compiled prompt typically combines

- a prompt template hard-coded by the developer;

- examples of valid outputs called few-shot examples;

- any necessary information retrieved from external APIs;

- and a set of relevant documents retrieved from the vector database.

Prompt execution / inference:

- Once the prompts have been compiled, they are submitted to a pre-trained LLM for inference—including both proprietary model APIs and open-source or self-trained models.

- Some developers also add operational systems like logging, caching, and validation at this stage.

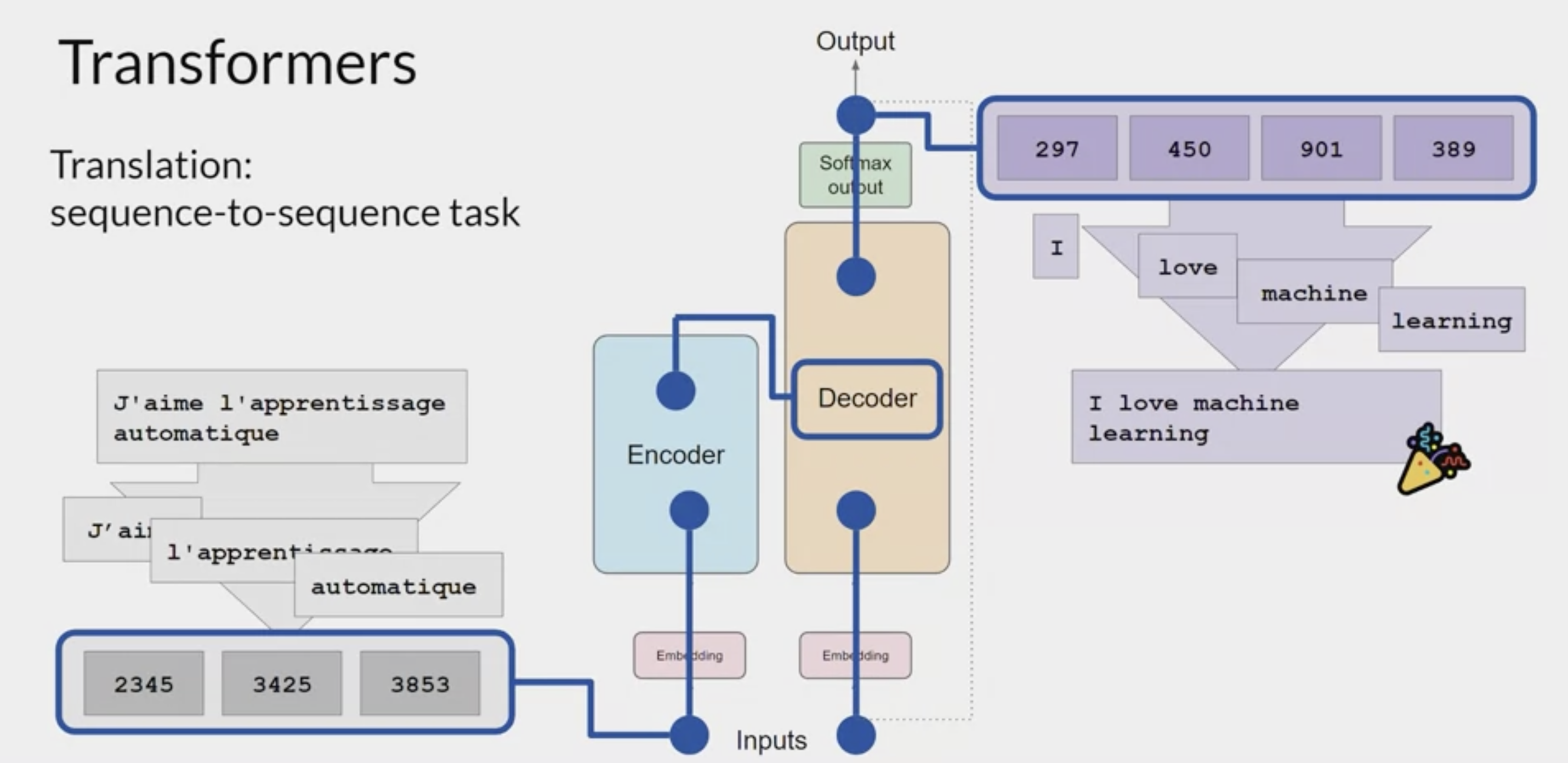

example: Generating text with transformers

- translation task

- a sequence-to-sequence task: the original objective of the transformer architecture designers.

- use a transformer model to translate the French phrase

[FOREIGN]into English.

Encoded side:

- First,

tokenize the input wordsusing this same tokenizer that was used to train the network. - These tokens are then added into the input on the encoder side of the network, passed through the embedding layer, and then fed into the

multi-headed attention layers. The outputs of the multi-headed attention layers are fed through a

feed-forward networkto the output of the encoder.- At this point, the data that leaves the encoder is a deep representation of the structure and meaning of the input sequence.

Decoded side:

- This representation is inserted into the middle of the decoder to influence the

decoder's self-attention mechanisms. - Next, a

start of sequence tokenis added to the input of the decoder. - This

triggers the decoder to predict the next token, based on the contextual understanding that it’s being provided from the encoder. The output of the decoder’s self-attention layers gets passed through the

decoder feed-forward networkand through a finalsoftmax output layer.At this point, we have our first token.

You’ll continue this loop, passing the output token back to the input to trigger the generation of the next token, until the model predicts an end-of-sequence token.

At this point, the final sequence of tokens can be detokenized into words, and you have the output.

- There are multiple ways in which you can use the output from the softmax layer to predict the next token. These can influence how creative you are generated text is.

Data preprocessing / embedding

Contextual data input

- Contextual data for LLM apps includes text documents, PDFs, and even structured formats like CSV or SQL tables.

- Data-loading and transformation solutions for this data vary widely across developers.

- Most use traditional ETL tools like

DatabricksorAirflow. - Some also use

document loadersbuilt into orchestration frameworks likeLangChain(powered by Unstructured) andLlamaIndex(powered by Llama Hub).

- Most use traditional ETL tools like

embeddings,

- most developers use the

OpenAI API, specifically with the text-embedding-ada-002 model. It’s easy to use (especially if you’re already already using other OpenAI APIs), gives reasonably good results, and is becoming increasingly cheap. - Some larger enterprises are also exploring

Cohere, which focuses their product efforts more narrowly on embeddings and has better performance in certain scenarios. - For developers who prefer open-source, the

Sentence Transformers libraryfromHugging Faceis a standard.

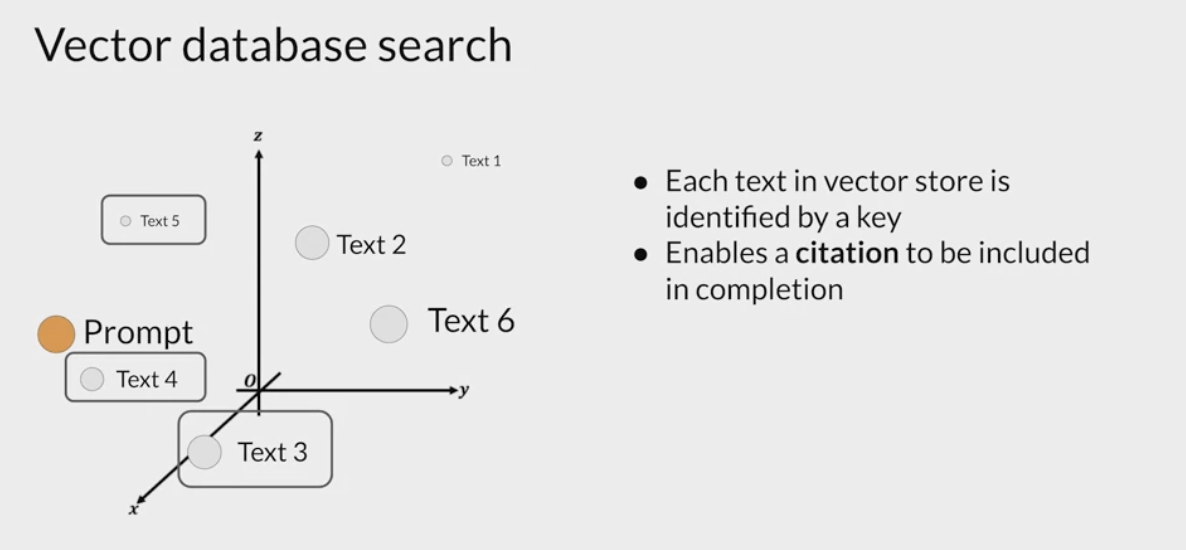

Vector Store

enable a fast and efficient kind of relevant search based on similarity.

- allow for fast searching of datasets and efficient identification of semantically related text.

important data storage strategy which contains vector representations of text.

a particularly useful data format for language models

internally they work with vector representations of language to generate text.

vector database

The most important piece of the preprocessing pipeline, from a systems standpoint

a particular implementation of a vector store where each vector is also identified by a key.

- This can allow the text generated by RAG to also include a citation for the document from which it was received.

It’s responsible for efficiently storing, comparing, and retrieving up to billions of embeddings (i.e., vectors).

The most common choice is

Pinecone. It’s the default because it’s fully cloud-hosted, easy to get started with, and has many of the features larger enterprises need in production (e.g., good performance at scale, SSO, and uptime SLAs).Open source systemslike Weaviate, Vespa, and Qdrant: They generally give excellent single-node performance and can be tailored for specific applications, so they are popular with experienced AI teams who prefer to build bespoke platforms.Local vector management librarieslike Chroma and Faiss: They have great developer experience and are easy to spin up for small apps and dev experiments. They don’t necessarily substitute for a full database at scale.OLTP extensionslike pgvector: good solution for devs who see every database-shaped hole and try to insert Postgres, or enterprises who buy most of their data infrastructure from a single cloud provider. It’s not clear, in the long run, if it makes sense to tightly couple vector and scalar workloads.

Looking ahead, most of the open source vector database companies are developing cloud offerings. Our research suggests achieving strong performance in the cloud, across a broad design space of possible use cases, is a very hard problem. Therefore, the option set may not change massively in the near term, but it likely will change in the long term. The key question is whether vector databases will resemble their OLTP and OLAP counterparts, consolidating around one or two popular systems.

the embedding pipeline may become more important over time

- how embeddings and vector databases will evolve as the usable context window grows for most models.

- It’s tempting to say embeddings will become less relevant, because contextual data can just be dropped into the prompt directly.

- However, feedback from experts on this topic suggests the opposite, that the embedding pipeline may become more important over time. Large context windows are a powerful tool, but they also entail significant computational cost. So making efficient use of them becomes a priority.

- We may start to see different types of embedding models become popular, trained directly for model relevancy, and vector databases designed to enable and take advantage of this.

Prompt construction / retrieval

Strategies for prompting LLMs and incorporating contextual data are becoming increasingly complex—and increasingly important as a source of product differentiation.

Most developers start new projects by experimenting with simple prompts, consisting of direct instructions (

zero-shot prompting) or some example outputs (few-shot prompting).- These prompts often give good results but fall short of accuracy levels required for production deployments.

The next level of prompting

jiu jitsuis designed to ground model responses in some source of truth and provide external context the model wasn’t trained on.

advanced prompting strategies

- The Prompt Engineering Guide catalogs no fewer than 12 more advanced prompting strategies, including:

- chain-of-thought, self-consistency, generated knowledge, tree of thoughts, directional stimulus, and many others.

- These strategies can also be used in conjunction to support different LLM use cases like document question answering, chatbots, etc.

Orchestration frameworks

LangChainandLlamaIndexshine.workflow:

- They abstract away many of the details of prompt chaining;

- interfacing with external APIs (including determining when an API call is needed);

- retrieving contextual data from vector databases;

- and maintaining memory across multiple LLM calls.

- They also provide templates for many of the common applications mentioned above.

Their output is a prompt, or series of prompts, to submit to a language model. These frameworks are widely used among hobbyists and startups looking to get an app off the ground .

- LangChain is still a relatively new project (currently on version 0.0.201), but we’re already starting to see apps built with it moving into production.

Some developers, especially early adopters of LLMs, prefer to switch to raw Python in production to eliminate an added dependency. But we expect this DIY approach to decline over time for most use cases, in a similar way to the traditional web app stack.

- ChatGPT.

- In its normal incarnation, ChatGPT is an app, not a developer tool. But it can also be accessed as an API.

- it performs some of the same functions as other orchestration frameworks, such as: abstracting away the need for bespoke prompts; maintaining state; and retrieving contextual data via plugins, APIs, or other sources.

- While not a direct competitor to the other tools listed here, ChatGPT can be considered a substitute solution, and it may eventually become a viable, simple alternative to prompt construction.

Prompt execution / inference

Prompt execution / inference

OpenAI- Today, OpenAI is the leader among language models. Nearly every developer starts new LLM apps using the OpenAI API with the gpt-4 or gpt-4-32k model.

- This gives a best-case scenario for app performance and is easy to use, in that it operates on a wide range of input domains and usually requires no fine-tuning or self-hosting.

When projects go into production and start to scale, a broader set of options come:

Switching to gpt-3.5-turbo: It’s ~50x cheaper and significantly faster than GPT-4. Many apps don’t need GPT-4-level accuracy, but do require low latency inference and cost effective support for free users.Other proprietary vendors (like Anthropic’s Claude models): Claude offers fast inference, GPT-3.5-level accuracy, more customization options for large customers, and up to a 100k context window (though we’ve found accuracy degrades with the length of input).Triaging requests to open source models: This can be especially effective in high-volume B2C use cases like search or chat, where there’s wide variance in query complexity and a need to serve free users cheaply.conjunction with fine-tuning open source base models, platforms like Databricks, Anyscale, Mosaic, Modal, and RunPod are used by a growing number of engineering teams.

A variety of inference options are available for open source models, including simple API interfaces from Hugging Face and Replicate; raw compute resources from the major cloud providers; and more opinionated cloud offerings like those listed above.

Open-source modelstrailproprietary offerings, but the gap is starting to close.The LLaMa models from Meta

- set a new bar for open source accuracy and kicked off a flurry of variants.

- Since LLaMa was licensed for research use only, a number of new providers have stepped in to train alternative base models (e.g., Together, Mosaic, Falcon, Mistral).

Meta is also debating a truly open source release of LLaMa2.

- When open source LLMs reach accuracy levels comparable to GPT-3.5, we expect to see a Stable Diffusion-like moment for text—including massive experimentation, sharing, and productionizing of fine-tuned models.

Hosting companies like Replicate are already adding tooling to make these models easier for software developers to consume. There’s a growing belief among developers that smaller, fine-tuned models can reach state-of-the-art accuracy in narrow use cases.

Most developers haven’t gone deep on operational tooling for LLMs yet.

- Caching is relatively common—usually based on Redis—because it improves application response times and cost.

- Tools like Weights & Biases and MLflow (ported from traditional machine learning) or PromptLayer and Helicone (purpose-built for LLMs) are also fairly widely used. They can log, track, and evaluate LLM outputs, usually for the purpose of improving prompt construction, tuning pipelines, or selecting models.

- There are also a number of new tools being developed to validate LLM outputs (e.g., Guardrails) or detect prompt injection attacks (e.g., Rebuff). Most of these operational tools encourage use of their own Python clients to make LLM calls, so it will be interesting to see how these solutions coexist over time.

the static portions of LLM apps (i.e. everything other than the model) also need to be hosted somewhere.

- The most common solutions we’ve seen so far are standard options like Vercel or the major cloud providers.

- Startups like Steamship provide end-to-end hosting for LLM apps, including orchestration (LangChain), multi-tenant data contexts, async tasks, vector storage, and key management.

- And companies like Anyscale and Modal allow developers to host models and Python code in one place.

AI agents frameworks

AutoGPT, described as “an experimental open-source attempt to make GPT-4 fully autonomous,”The in-context learning pattern is effective at solving hallucination and data-freshness problems, in order to better support content-generation tasks.

Agents, on the other hand, give AI apps a fundamentally new set of capabilities: to solve complex problems, to act on the outside world, and to learn from experience post-deployment.

- They do this through a combination of

advanced reasoning/planning, tool usage, and memory / recursion / self-reflection.

- They do this through a combination of

agents have the potential to become a central piece of the LLM app architecture

And existing frameworks like LangChain have incorporated some agent concepts already. There’s only one problem: agents don’t really work yet. Most agent frameworks today are in the proof-of-concept phase—capable of incredible demos but not yet reliable, reproducible task-completion.

LLM Tools

Medusa

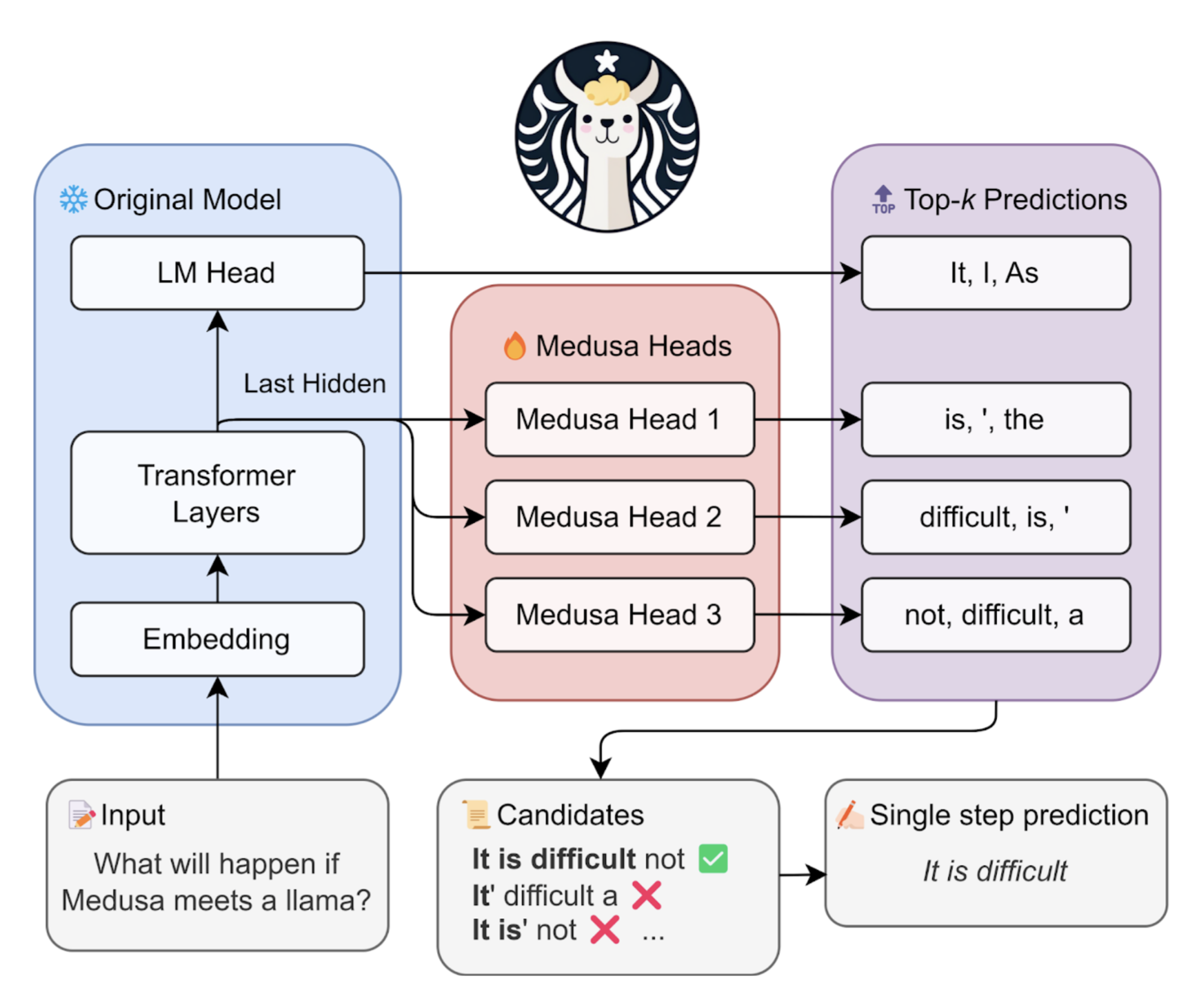

Our approach revisits an underrated gem from the paper “Blockwise Parallel Decoding for Deep Autoregressive Models” [Stern et al. 2018] back to the invention of the Transformer model. rather than pulling in an entirely new draft model to predict subsequent tokens, why not simply extend the original model itself? This is where the “Medusa heads” come in.

a simpler, user-friendly framework for accelerating LLM generation.

Instead of using an additional draft model like

speculative decoding, Medusa merely introduces a few additional decoding heads, following the idea of [Stern et al. 2018] with some other ingredients.Despite its simple design, Medusa can improve the generation efficiency of LLMs by about 2x.

These additional decoding heads seamlessly integrate with the original model, producing blocks of tokens at each generative juncture.

benefit:

Unlike the draft model, Medusa heads can be trained in conjunction with the original model (which remains frozen during training). This method allows for

fine-tuning large models on a single GPU, taking advantage of the powerful base model’s learned representations.also, since the new heads consist of just a single layer akin 类似的 to the original language model head, Medusa does not add complexity to the serving system design and is friendly to distributed settings.

On its own, Medusa heads don’t quite hit the mark of doubling processing speeds. But here’s the twist:

When we pair this with a tree-based attention mechanism, we can verify several candidates generated by Medusa heads in parallel. This way, the Medusa heads’ predictive prowess truly shone through, offering a 2x to 3x boost in speed.

Eschewing the traditional importance sampling scheme, we created an efficient and high-quality alternative crafted specifically for the generation with Medusa heads. This new approach entirely sidesteps the sampling overhead, even adding an extra pep to Medusa’s already accelerated step.

In a nutshell, we solve the challenges of speculative decoding with a simple system:

No separate model: Instead of introducing a new draft model, train multiple decoding heads on the same model.

Simple integration to existing systems: The training is parameter-efficient so that even GPU poor can do it. And since there is no additional model, there is no need to adjust the distributed computing setup.

Treat sampling as a relaxation 放松: Relaxing the requirement of matching the distribution of the original model makes the non-greedy generation even faster than greedy decoding.

The figure below offers a visual breakdown of the Medusa pipeline for those curious about the nuts and bolts.

Overview of Medusa

Medusa introduces

multiple headson top of the last hidden states of the LLM, enabling the prediction of several subsequent tokens in parallel.When augmenting a model with Medusa heads, the original model is frozen during training, and only the Medusa heads undergo fine-tuning. This approach makes it feasible to

fine-tune large models on a single GPU.During inference, each head generates multiple top predictions for its designated position. These predictions are assembled into candidates and processed in parallel using a

tree-based attention mechanism.The final step involves utilizing a typical acceptance scheme to select reasonable continuations, and the longest accepted candidate prefix will be used for the next decoding phase.

The efficiency of the decoding process is enhanced by accepting more tokens simultaneously, thus reducing the number of required decoding steps.

Let’s dive into the three components of Medusa: Medusa heads, tree attention, and typical acceptance scheme.

Medusa heads

akin to the language model head in the original architecture (the last layer of a causal Transformer model), but with a twist:

- they predict multiple forthcoming tokens, not just the immediate next one. Drawing inspiration from the Blockwise Parallel Decoding approach, we implement each Medusa head as a single layer of feed-forward network, augmented with a residual connection.

Training these heads is remarkably straightforward. either use the same corpus 本体 that trained the original model or generate a new corpus using the model itself.

Importantly, during this training phase, the original model remains static; only the Medusa heads are fine-tuned.

This targeted training results in a highly parameter-efficient process that reaches convergence 趋同 swiftly 迅速地, especially when compared to the computational heaviness 沉重 of training a separate draft model in speculative decoding methods.

The efficacy of Medusa heads is quite impressive. Medusa heads achieve a top-1 accuracy rate of approximately 60% for predicting the ‘next-next’ token.

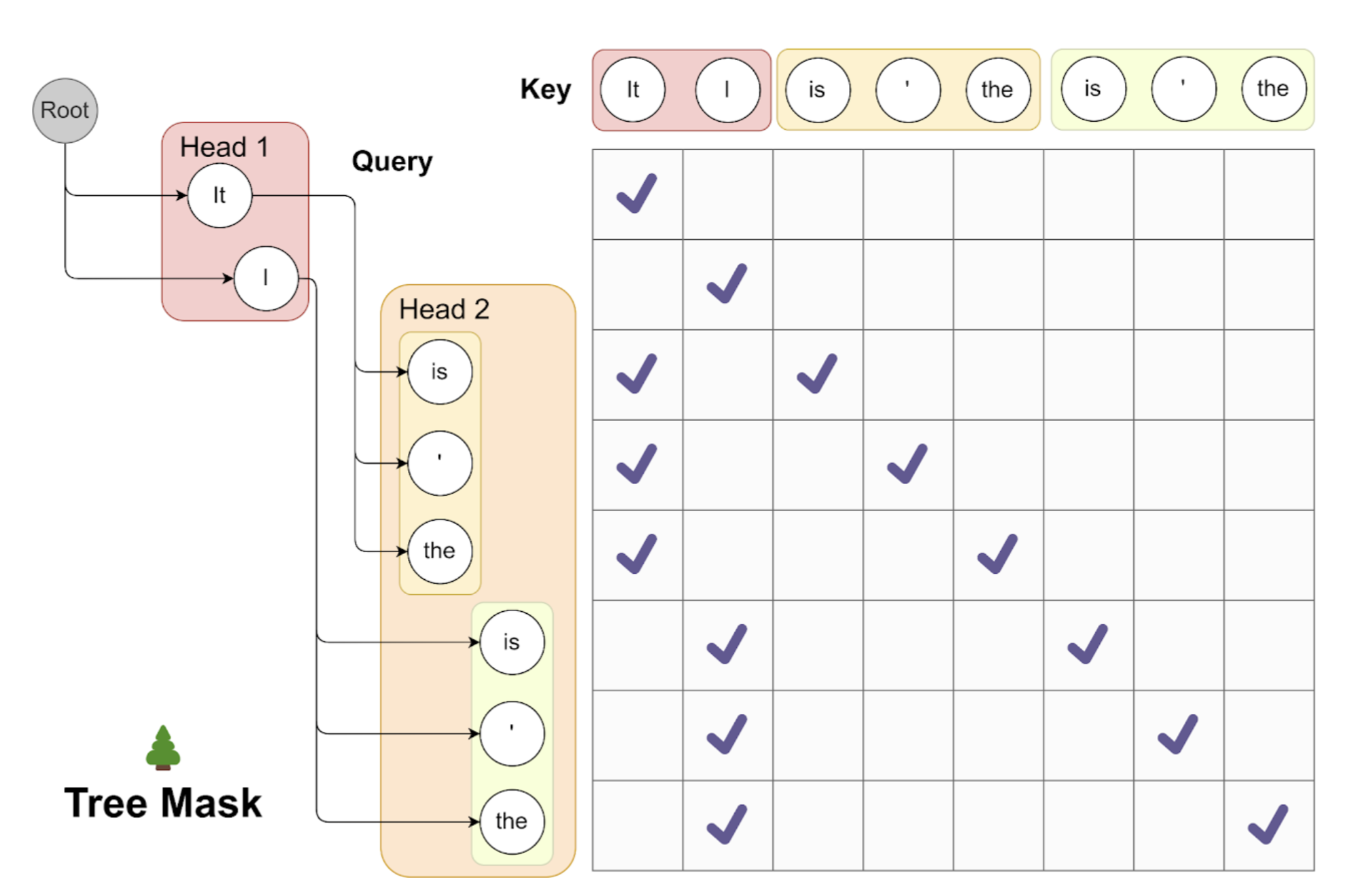

Tree attention

During our tests, we uncovered some striking metrics: although the top-1 accuracy for predicting the ‘next-next’ token hovers around 60%, the top-5 accuracy soars to over 80%.

This substantial increase indicates that if we can strategically leverage the multiple top-ranked predictions made by the Medusa heads, we can significantly amplify the number of tokens generated per decoding step.

With this goal, we first craft a set of candidates by taking the Cartesian product of the top predictions from each Medusa head.

We then encode the dependency graph into the attention following the idea from graph neural networks so that we can process multiple candidates in parallel.

Tree Attention. This visualization demonstrates the use of tree attention to process multiple candidates concurrently.

As exemplified, the top-2 predictions from the first Medusa head and the top-3 from the second result in 2*3=6 candidates. Each of these candidates corresponds to a distinct branch within the tree structure.

To guarantee that each token only accesses its predecessors, we devise an attention mask that exclusively permits attention flow from the current token back to its antecedent tokens. The positional indices for positional encoding are adjusted in line with this structure.

For example, let’s consider a scenario where we use top-2 predictions from the first Medusa head and top-3 predictions from the second

- In this case, any prediction from the first head could be paired with any prediction from the second head, culminating in a multi-level tree structure.

- Each level of this tree corresponds to predictions from one of the Medusa heads. Within this tree, we implement an attention mask that restricts attention only to a token’s predecessors, preserving the concept of historical context.

- By doing so and by setting positional indices for positional encoding accordingly, we can process a wide array of candidates simultaneously without needing to inflate the batch size.

We would also remark that a few independent works also adopt very similar ideas of tree attention [1, 2]. Compared with them, our methodology leans towards a simpler form of tree attention where the tree pattern is regular and fixed during inference, which enables a preprocessing of tree attention mask that further improves the efficiency.

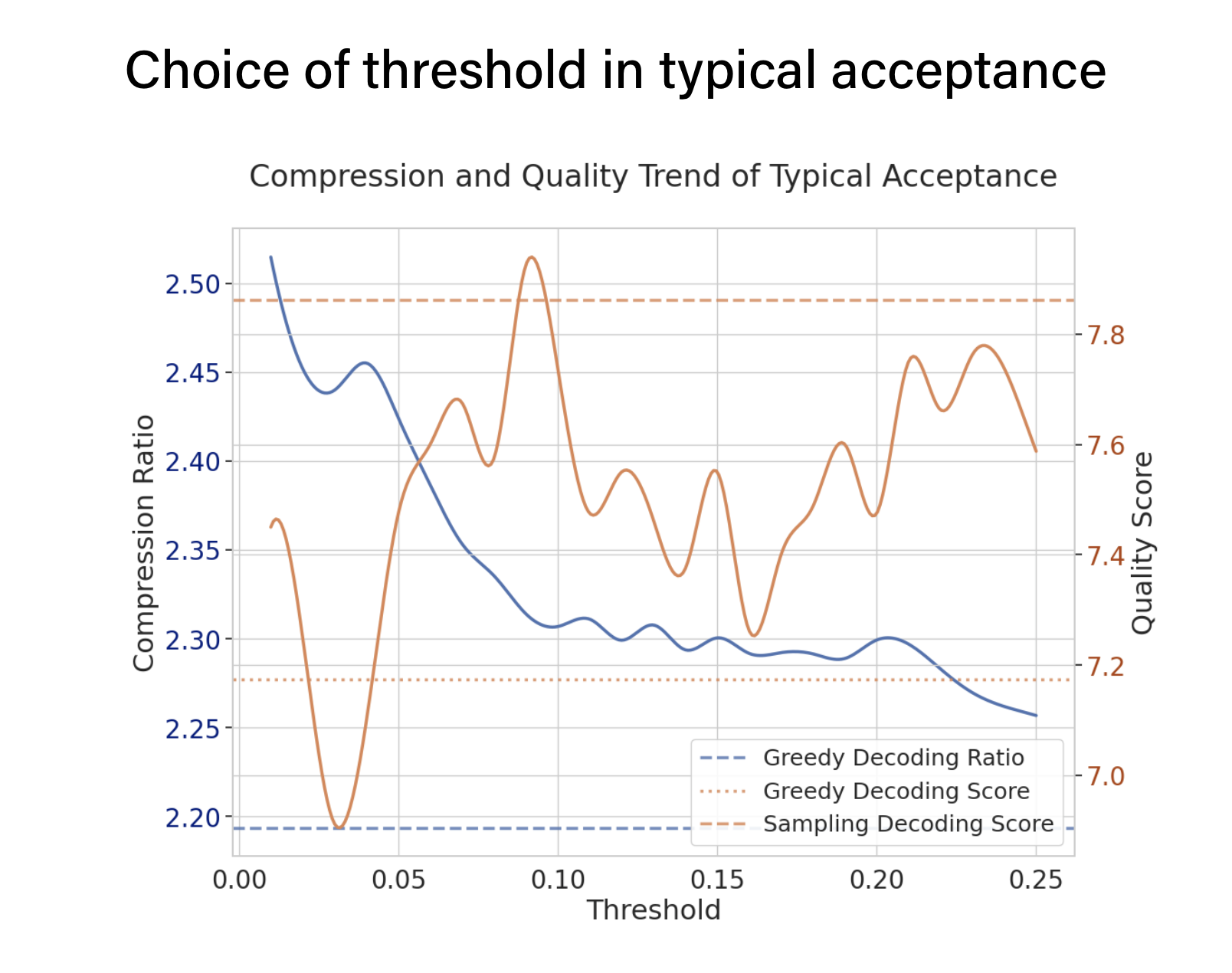

Typical acceptance

- In earlier research on speculative decoding, the technique of importance sampling was used to generate diverse outputs closely aligned with the original model’s predictions.

- However, later studies showed that this method tends to become less efficient as you turn up the “creativity dial,” known as the sampling temperature.

In simpler terms, if the draft model is just as good as the original model, you should ideally accept all its outputs, making the process super efficient. However, importance sampling will likely reject this solution in the middle.

In the real world, we often tweak the sampling temperature just to control the model’s creativity, not necessarily to match the original model’s distribution. So why not focus on just accepting plausible 貌似合理的 candidates?

We then introduce the typical acceptance scheme.

Drawing inspiration from existing work on truncation 缩短 sampling, we aim to pick candidates that are likely enough according to the original model. We set a threshold based on the original model’s prediction probabilities, and if a candidate exceeds this, it’s accepted.

- In technical jargon, we take the minimum of a hard threshold and an entropy 熵, dependent threshold to decide whether to accept a candidate as in truncation sampling.

- This ensures that meaningful tokens and reasonable continuations are chosen during decoding.

- We always accept the first token using greedy decoding, ensuring that at least one token is generated in each step.

- The final output is then the longest sequence that passes our acceptance test.

What’s great about this approach is its adaptability.

If set the sampling temperature to zero, it simply reverts to the most efficient form, greedy decoding.

When you increase the temperature, our method becomes even more efficient, allowing for longer accepted sequences, a claim we’ve confirmed through rigorous testing.

in essence, our typical acceptance scheme offers a more efficient way to generate the creative output of LLMs.

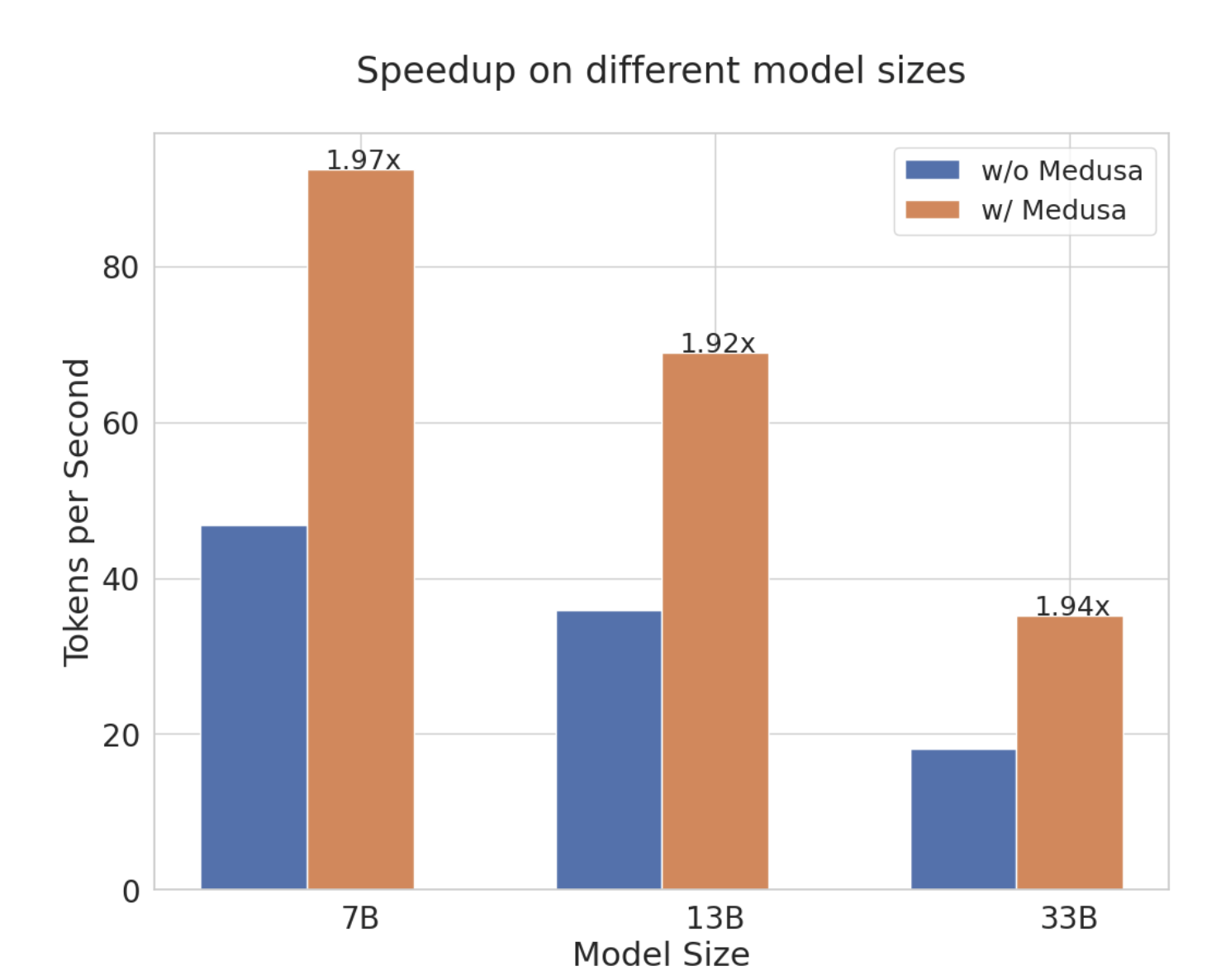

accelerate models

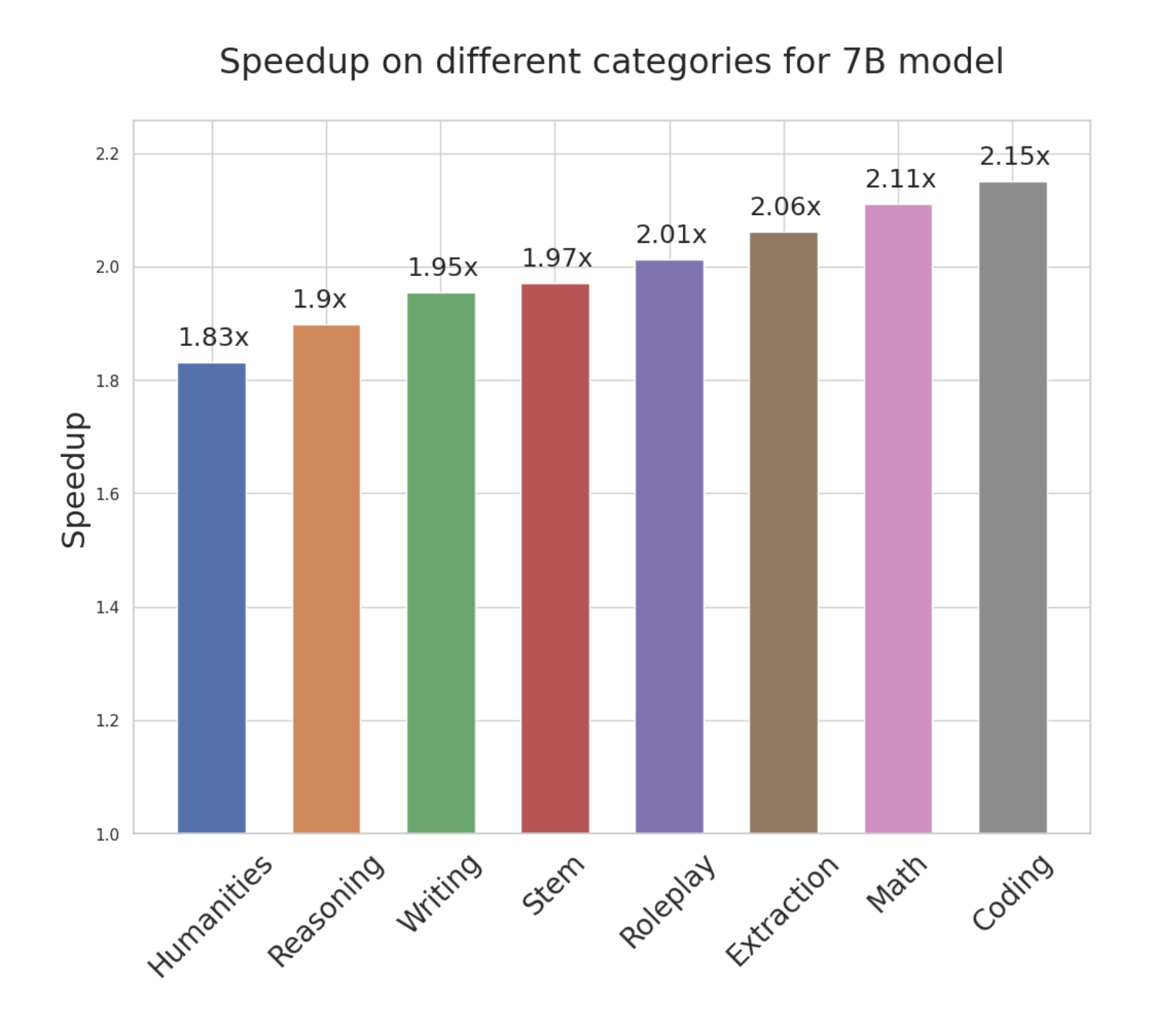

We tested Medusa with Vicuna models (specialized Llama models fine-tuned specifically for chat applications).

- These models vary in size, with parameter counts of 7B, 13B, and 33B.

- Our goal was to measure how Medusa could accelerate 加速 these models in a real-world chatbot environment.

When it comes to training Medusa heads, we opted for a simple approach. We utilized the publicly available ShareGPT dataset, a subset of the training data originally used for Vicuna models and only trained for a single epoch.

this entire training process could be completed in just a few hours to a day, depending on the model size, all on a single A100-80G GPU.

Notably, Medusa can be easily combined with a quantized base model to reduce the memory requirement. We take this advantage and use an 8-bit quantization 量化 when training the 33B model.

To simulate a real-world setting, we use the MT bench for evaluation. The results were encouraging: With its simple design, Medusa consistently achieved approximately a 2x speedup in wall time across a broad spectrum of use cases.

Remarkably, with Medusa’s optimization, a 33B parameter Vicuna model could operate as swiftly as a 13B model.

Ablation Study 消融研究

When harnessing the predictive abilities of Medusa heads, we enjoy the flexibility to select how many top candidates each head should consider.

- For instance, we might opt for the top-3 predictions from the first head and the top-2 from the second. When we take the Cartesian product of these top candidates, we generate a set of six continuations for the model to evaluate.

- This level of configurability comes with its trade-offs.

- On the one hand, selecting more top predictions increases the likelihood of the model accepting generated tokens.

On the other, it also raises the computational overhead at each decoding step. To find the optimal balance, we experimented with various configurations and identified the most effective setup, as illustrated in the accompanying figure.

- In the typical acceptance scheme, a critical hyperparameter—referred to as the

‘threshold’: whether the tokens generated are plausible based on the model’s own predictions. The higher this threshold, the more stringent the criteria for acceptance, which in turn impacts the overall speedup gained through this approach.

- We explore this trade-off between quality and speedup through experiments on two creativity-oriented tasks from the MT bench. The results, depicted in the figure, reveal that the typical acceptance offers a 10% speedup compared to greedy decoding methods. This speedup is notably better than when employing speculative decoding with random sampling, which actually slowed down the process compared to greedy decoding.

LLM Pre-Training

[./LLM/2023-04-24-LLM_Pretraining.md]

LLM Data Training

[./LLM/2023-04-24-LLM_DataTraining.md]

Confidence score for ML model

[./LLM/2023-04-24-LLM_ConfidenceScore.md]

Transparency

Transparency in the context of AI models refers to the degree to which the inner workings of the model are understandable, interpretable, and explainable to humans. It encompasses several aspects:

- Explainability:

- This refers to the ability to understand and interpret the model’s decisions.

- An interpretable model

provides clear and understandable reasonsfor its predictions or actions. - This is crucial, especially in high-stakes applications like healthcare or finance, where accountability and trust are essential.

- Visibility:

- Transparency also involves making the model architecture, parameters, and training data visible to those who are affected by its decisions.

- This allows external parties to scrutinize the model for biases, ethical concerns, or potential risks.

- Audibility:

- The ability to audit an AI model involves examining its processes, inputs, and outputs to ensure it aligns with ethical and legal standards.

- Auditing enhances accountability and helps identify and rectify issues or biases.

- Comprehensibility:

- A transparent AI model should be comprehensible to various stakeholders, including domain experts, policymakers, and the general public. This involves presenting complex technical concepts in a way that is accessible to non-experts.

- Fairness and Bias: Transparency also relates to addressing biases in AI models. Understanding how the model makes decisions can help identify and rectify biased behavior, ensuring fair treatment across diverse demographic groups.

- Transparency is crucial for building trust in AI systems, especially as they are increasingly integrated into various aspects of society. It helps users, regulators, and the general public understand how AI systems function, assess their reliability, and hold developers and organizations accountable for their impact. Various techniques and tools are being developed to enhance the transparency of AI models, but it remains an ongoing area of research and development.

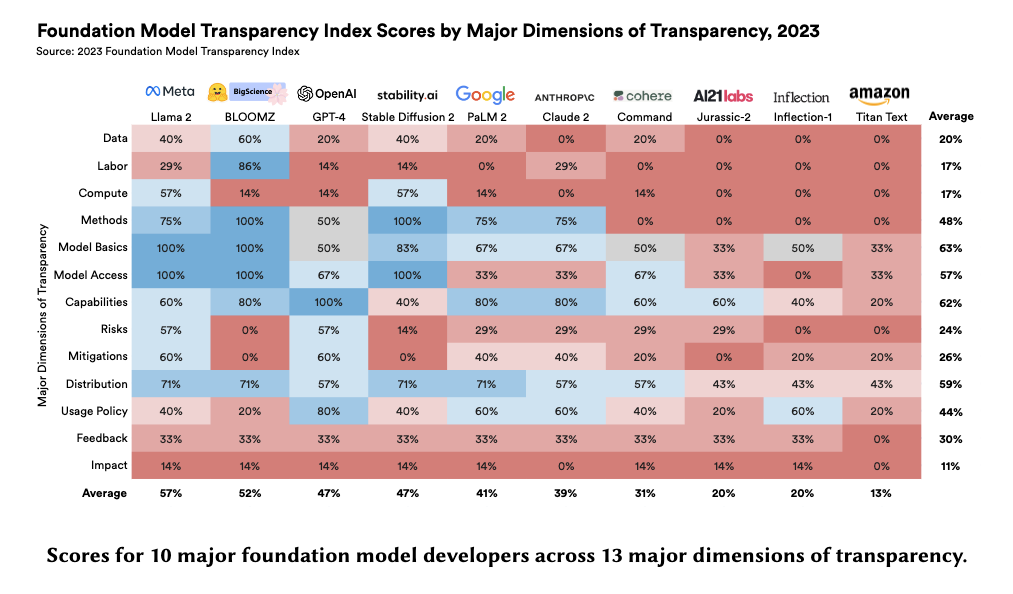

The Foundation Model Transparency Index

The Foundation Model Transparency Index 3.

Foundation models have rapidly permeated society, catalyzing a wave of generative AI applications spanning enterprise and consumer-facing contexts.

While the societal impact of foundation models is growing, transparency is on the decline, mirroring the opacity that has plagued past digital technologies (e.g. social media).

Reversing this trend is essential:

- transparency is a vital precondition for public accountability, scientific innovation, and effective governance. To assess the transparency of the founda- tion model ecosystem and help improve transparency over time, we introduce the Foundation Model Transparency Index. The 2023 Foundation Model Transparency Index specifies 100 fine-grained indicators that comprehensively codify transparency for foundation models, spanning the upstream resources used to build a foundation model (e.g. data, labor, compute), details about the model itself (e.g. size, capabilities, risks), and the downstream use (e.g. distribution channels, usage policies, affected geographies). We score 10 major foundation model developers (e.g. OpenAI, Google, Meta) against the 100 indicators to assess their transparency. To facilitate and standardize assessment, we score developers in relation to their practices for their flagship foundation model (e.g. GPT-4 for OpenAI, PaLM 2 for Google, Llama 2 for Meta). We present 10 top-level findings about the foundation model ecosystem: for example, no developer currently discloses significant information about the downstream impact of its flagship model, such as the number of users, affected market sectors, or how users can seek redress for harm. Overall, the Foundation Model Transparency Index establishes the level of transparency today to drive progress on foundation model governance via industry standards and regulatory intervention.

GenAI for Code

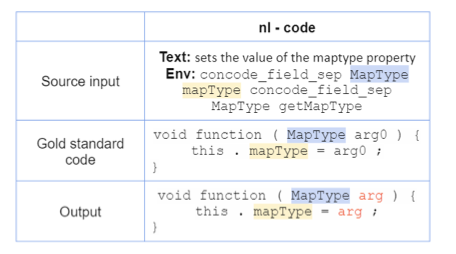

Pretrained transformer-based modelshave shown high performance innatural language generationtask. However, a new wave of interest has surged:automatic programming language generation.This task consists of translating natural language instructions to a programming code. effort is still needed in automatic code generation

When developing software, programmers use both

natural language (NL)andprogramming language (PL).- natural language is used to write documentation (ex: JavaDoc) to describe different classes, methods and variables.

- Documentation is usually written by experts and aims to provide a comprehensive explanation of the source code to every person who wants to use/develop the project.

the automation of

programming code generationfrom natural language has been studied using various techniques of artificial intelligence (AI): automatically generate code for simple tasks, while allowing them to tackle only the most difficult ones.After the big success of Transformers Neural Network, it has been adapted to many

Natural Language Processing (NLP) tasks(such as question answering, text translation, automatic summarization)- popular models are GPT, BERT, BART, and T5

One of the main factors of success: trained on very large corpora.

Recently, there has been an increasing interest in programming code generation. scientific community based its research on proposing systems that are based on pretrained transformers.

CodeGPTandGPT-adaptedare based onGPT2PLBARTis based onBART,CoTexTfollowsT5.- Note that these models have been pretrained on

bimodal data(containing both PL and NL) and onunimodal data(containing only PL).

Programming language generation is more challenging than standard text generation, PLs contain stricter grammar and syntactic rules.

an example of an input sequence received by the model (in NL), the output of the model (in PL) and the target code (called gold standard or reference code).

study from the state of the art:

initialize the model from powerful pretrained models, instead of performing a training from scratch.

- Models initialized from previous pretrained weights achieve better performance than models trained from scratch

additional pretraining

Transformers neural network improves its performance significantly from increasing the amount of pretraining datafor some specific tasks, the way to improve the model’s performance is to

pretrain it with a dataset that belongs to a specific domain,- Models such as

SciBERTandBioBERThave shown the benefits to pretrain a model using data related to a specific domain.

- Models such as

Increased data implies better training performance. This finding is intuitive since a large and diversified dataset helps improving the model’s representation.

The objective learning used during the pretraining stage gives the model some benefits when learning the downstream tasks

a low number of epochs when pretraining a model leads to higher scores in generation tasks.

scale the input and output length during the fine-tuning of the model

The input and output sequence length used to train the model matters in the performance of the model

Number of steps, when increased the length of sequences in the model, it increased the number of fine-tuning steps. a way to improve the model’s performance is by increasing the number of steps in the training.

carry out experiments combining the unimodal and bimodal data in the training

T5 has shown the best performance in language generation tasks.

Fine-tuning

additional pretraining

dataset

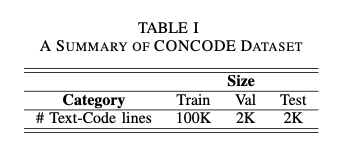

CONCODECodeSearchNet, Corpus, and GitHub Repositories.

EXPERIMENTAL

Evaluation Metrics

To evaluate the models

BLEU:a metric based on

n-gram precisioncomputed between the candidate and the reference(s).N-gram precisionpenalizes the model if:- (1) words appear in the candidate but not in any of the references, or

- (2) word appear more times in the candidate than in the maximum reference count.

- However, the metric fails if the candidate does not have the appropriate length.

- we use the

corpus-level BLEU scorein the code generation task.

CodeBLEU:- works via

n-grammatch, and it takes into account both the syntactic and semantic matches. - The syntax match is obtained by matching between the

code candidate and code reference(s) sub-treesofabstract syntax tree (AST). - The semantic match considers the data-flow structure.

- works via

Exact Match (EM):- the ratio of the number of predictions that match exactly any of the code reference(s).

usage

Juptyper

To run a shell command from within a notebook cell, you must put a ! in front of the command: !pip install hyperopt

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

!nvidia-smi --list-gpus

!pip install --upgrade pip

!pip uninstall -y git+https://github.com/openai/CLIP.git \

urllib3==1.25.10 \

sentence_transformers \

torch torchvision pytorch-lightning lightning-bolts

# install supporting puthon packages for Data Frame processing

# and for Progress Bar

!pip install numpy pandas matplotlib tqdm scikit-learn

# install only the older version of Torch

!pip install --ignore-installed \

urllib3==1.25.10 \

torch torchvision pytorch-lightning lightning-bolts

# install latest (Upgrade) sentence transformers for fine-tuning

!pip install --ignore-installed \

urllib3==1.25.10 \

pyyaml \

sentence_transformers

# Use CLIP model from OpenAI

!pip install git+https://github.com/openai/CLIP.git

# load the python package to run Pandas in parallel for better speed

!pip install pandarallel

!pip install torchaudio

!pip uninstall -y nvidia_cublas_cu11

Hugging Face

like github repo

- search for an AI model

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

# +++++ Getting started with our git and git-lfs interface

# If you need to create a repo from the command line (skip if you created a repo from the website)

pip install huggingface_hub

# You already have it if you installed transformers or datasets

huggingface-cli login

# Log in using a token from huggingface.co/settings/tokens

# Create a model or dataset repo from the CLI if needed

huggingface-cli repo create repo_name --type {model, dataset, space}

# +++++ Clone the model or dataset locally

# Make sure you have git-lfs installed

# (https://git-lfs.github.com)

git lfs install

git clone https://huggingface.co/username/repo_name

# +++++ Then add, commit and push any file you want, including larges files

# save files via `.save_pretrained()` or move them here

git add .

git commit -m "commit from $USER"

git push

# +++++ In most cases, if you're using one of the compatible libraries, the repo will then be accessible from code, through its identifier: username/repo_name

# For example for a transformers model, anyone can load it with:

tokenizer = AutoTokenizer.from_pretrained("username/repo_name")

model = AutoModel.from_pretrained("username/repo_name")

Generative AI Time Series Forecasting

Multivariate Time Series Forecasting

Generative AI Transformers for Time Series Forecasting

Falcon 40b

Chatgpt competitor - https://huggingface.co/tiiuae/falcon-40b

Power of Falcon 40b chat - https://huggingface.co/spaces/HuggingFaceH4/falcon-chat

Pre-Training - https://huggingface.co/tiiuae/falcon-40b#training-data

or https://github.com/aws-samples/amazon-sagemaker-generativeai/blob/main/studio-notebook-fine-tuning/falcon-40b-qlora-finetune-summarize.ipynb

Chat with Falcon-40B-Instruct, brainstorm ideas, discuss the holiday plans, and more!

- ✨ This demo is powered by Falcon-40B, finetuned on the Baize dataset, and running with Text Generation Inference. Falcon-40B is a state-of-the-art

large language modelbuilt by the Technology Innovation Institute in Abu Dhabi. It is trained on 1 trillion tokens (including RefinedWeb) and available under the Apache 2.0 license. It currently holds the 🥇 1st place on the 🤗 Open LLM leaderboard. This demo is made available by the HuggingFace H4 team. - 🧪 This is only a first experimental preview: the H4 team intends to provide increasingly capable versions of Falcon Chat in the future, based on improved datasets and RLHF/RLAIF.

- 👀 Learn more about Falcon LLM:

falconllm.tii.ae - ➡️️ Intended Use: this demo is intended to showcase an early finetuning of Falcon-40B, to illustrate the impact (and limitations) of finetuning on a dataset of conversations and instructions. We encourage the community to further build upon the base model, and to create even better instruct/chat versions!

- ⚠️ Limitations: the model can and will produce factually incorrect information, hallucinating facts and actions. As it has not undergone any advanced tuning/alignment, it can produce problematic outputs, especially if prompted to do so. Finally, this demo is limited to a session length of about 1,000 words.

CodeParrot

TAPEX

Give a table of data and then query

- 0 shot question (answer right away)

- fine tune: https://github.com/SibilTaram/tapax_transformers/tree/add_tapex_bis/examples

- demo: https://huggingface.co/microsoft/tapex-base

common LLM

GPT

- the greatest achievement in this field as it amassed 100 million active users in 2 months from the day of its release.

- ChatGPT

- GPT-4 by OpenAI 🔥

- programming code generation

CodeGPTandGPT-adaptedbased on GPT2CodeGPTis trained from scratch onCodeSearchNetdatasetCodeGPT-adaptedis initialized from GPT-2 pretrained weights.

LLaMA

- by Meta 🦙

AlexaTM

- by Amazon 🏫

Minerva

- by Google ✖️➕

BERT

- programming code generation:

CodeBERT SciBERT,BioBERT

BART

- programming code generation

PLBARTis based onBART,PLBARTuses the same architecture thanBARTbase.PLBARTuses three noising strategies: token masking, token deletion and token infilling.

T5

- programming code generation

CoTexTfollowsT5: based their pretraining onCodeSearchNet

JaCoText

- model based on Transformers neural network.

- pretrained model based on Transformers

- It aims to generate java source code from natural language text.

1 GPT 系列(OpenAI)

语言模型

- 语言模型是 GPT 系列模型的基座。[^通俗易懂的LLM(上篇)]

语言模型,

- 简单来说,就是看一个句子是人话的可能性。

专业一点来说

- 给定一个句子,其字符是 $W=(w_{1},w_{2},\cdots,w_{L})$

那么,从语言模型来看,这个句子是人话的可能性就是: $\begin{aligned} P(W)&=P(w_{1},w_{2},\cdots,w_{L})\ &=P(w_{1})P(w_{2} w_{1})P(w_{3} w_{1},w_{2})\cdots P(w_{L} w_{1},w_{2},\cdots,w_{L-1})\ \end{aligned}$ 但是, $L$ 太长就会很稀疏,直接算这个概率不好计算,我们就可以用近似计算: $\begin{aligned} P(W)&=P(w_{1},w_{2},\cdots,w_{L})\ &=P(w_{1})P(w_{2} w_{1})P(w_{3} w_{1},w_{2})\cdots P(w_{L} w_{1},w_{2},\cdots,w_{L-1})\ &=P(w_{1})P(w_{2} w_{1})\cdots P(w_{L} w_{L-N},\cdots,w_{L-1}) \end{aligned}$ - 这就是常说的 N-gram 统计语言模型,N 通常是 2,3,4。

- 特别的,当

N=1时,语言模型就退化为各个字符出现的概率之积。 - 当

N=4时语言模型就比较大了,实际应用中一般最大也就是 4 了。 根据条件概率 $P(w_{L} w_{L-N},\cdots,w_{L-1})$,我们就能知道给定前 N 个字,下一个字是什么字的概率了。

语言模型的评价指标可以采用 PPL(困惑度,Perplexity [^语言模型])

1.1 GPT-1, GPT-2, GPT-3

2017 年,

Google推出Transformer,利用 attention 完全替代过往深度学习中的 Recurrence 和 Convolution 结构,直白地展现出了“大一统模型”的野心,”xxx is all you need”也成了一个玩不烂的梗。- 2018 年 6 月,

OpenAI推出基于Transformer Decoder改造的第一代GPT(Generative Pre-Training),有效证明了在 NLP 领域上使用预训练+微调方式的有效性。 2018 年 10 月,

Google推出基于Transformer Encoder部分的Bert,在同样参数大小的前提下,其效果领跑于 GPT-1,一时成为 NLP 领域的领头羊。不甘示弱的 OpenAI 在 4 个月后,推出更大的模型GPT-2(GPT-1: 110M,Bert: 340M,GPT-2: 1.5B),同时,OpenAI 也知道,光靠增加模型大小和训练数据集来获得一个和 Bert 差不多效果的模型,其实是没有技术含量的。于是,在 GPT-2 里,OpenAI 引入 zero-shot 并证明了其有效性。

- 此后,OpenAI 在 LLM 上义无反顾地走了下去,在 2020 年 6 月推出巨人 GPT-3,参数量高达 175B,各类实验效果达到顶峰,据说一次训练费用为 1200w 美元,“贵”也成了普通工业界踏足 GPT 系列的壁垒之一。

GPT-1

- GPT-1 是 OpenAI 在论文《Improving Language Understanding by Generative Pre-Training》中提出的生成式预训练语言模型。

该模型的核心思想: 通过二段式的训练

- 第一个阶段是利用语言模型进行预训练(无监督形式)

- 第二阶段通过 Fine-Tuning 的模式解决下游任务(监督模式下 GPT-1 可以很好地完成若干下游任务,包括文本分类, 自然语言推理, 问答, 语义相似度等。

在多个下游任务中,微调后的 GPT-1 性能均超过了当时针对特定任务训练的 SOTA 模型。

自然语言推理(Natural Language Inference 或者 Textual Entailment): 判断两个句子是包含关系(entailment),矛盾关系(contradiction),或者中立关系(neutral);

问答和常识推理(Question answering and commonsense reasoning): 类似于多选题,输入一个文章,一个问题以及若干个候选答案,输出为每个答案的预测概率;

语义相似度(Semantic Similarity): 判断两个句子是否语义上是相关的;

- 分类(Classification): 判断输入文本是指定的哪个类别。

GPT-1 的模型结构及训练流程。

模型结构:

GPT-1 基础架构是基于

Transformer的Decoder部分,同时删除了Encoder-Decoder Attention层,只保留了Masked Multi-Head Attention层和Feed Forward层。Transformer 结构提出之始便用于机器翻译任务

- 机器翻译是一个序列到序列的任务,因此 Transformer 设计了

- Encoder 用于提取源端语言的语义特征

- Decoder 提取目标端语言的语义特征,并生成相对应的译文。

GPT-1 目标是服务于单序列文本的生成式任务,所以含弃了关于 Encoder 部分,包括 Decoder 的 Encoder-Decoder Attention 层。

整体是 12 层的 Transformer-Decoder 变体,如下图所示:

- 除此之外,GPT-1 还将 attention 的维数扩大到 768(原来为 512),将 attention 的头数增加到 12 个(原来为 8 个),将 Feed Forward 层的隐层维数增加到 3072(原来为 2048),总参数达到 110M。

- GPT-1 还优化了学习率预热算法,使用更大的 BPE 码表(词表大小为 40478,478 个 base characters + 40000 个结合的字符),激活函数 ReLU 改为对梯度更新更友好的高斯误差线性单元 GeLU,将正余弦构造的位置编码改为了带学习的位置编码。

模型训练:

GPT-1 模型训练整体上分为两步:

- 在大规模无标注文本数据上学习到一个高容量的语言模型;

- 在标注数据上进行微调。其中第二步是针对具体的下游任务来进行训练的。

无监督预训练:

- why

预训练叫做无监督训练- 因为我们其实没有标注样本,而是拿下一个词当做标签进行模型训练

- 这种方式也被称作

自监督训练。

- 总体训练任务目标是根据已知的词预测未知的词。

- 在这里设定一定的窗口大小,即根据有限的词预测下一个词:

- 给定一个语料的句子序列 $\mathcal{U}={u_{1},\cdots,u_{n}}$

已知前 $k$ 个词预测当前词 $u_{i}$,用一个标准的语言模型目标去极大化这个似然函数: $L_{1}(\mathcal{U})=\sum_{i} logP(u_{i} u_{i-k},\cdots, u_{i-1};\Theta)$ 其中:

- $k$ 是滑动窗口大小, $\Theta$ 是要优化的参数。

$P(u)$ 的计算方法是:

\[\begin{aligned} h_{0}&=UW_{e}+W_{p} \\ h_{i}&=transformer\_block(h_{i-1}), \forall i \in[1,n] \\ P(u)&=softmax(h_{n}W_{e}^{T}) \end{aligned}\]

- 其中:

- $W_{e}$ 是词向量矩阵(token embedding matrix)

- $W_{p}$ 是位置向量矩阵(position embedding matrix)

- $U=(u_{-k},\cdots,u_{-1})$ 是 tokens 的上下文向量

源代码中:

- $u_{i}$ 都是 one-hot 编码向量,相当于做一个查询操作

- $U$ 存储索引

- $W_{e}$ 存储着词向量值, $n$ 是 Decoder 层的数量。

上面是论文中的描述,举一个例子说明 GPT-1 实际上是如何进行

无监督预训练的。例如输入文本是: 【今天很开心】

- 这段文本经过切词转换为一个个 token 后,输入 GPT-1 的

transformer-decoder结构 在最后一层,会输出每个 token 对应的表征向量,即上文的 $h_{n}\in R^{m\times d}$

- 其中

- $m$ 是 token 数量,这个例子中就是 5

- $d$ 是模型维度,GPT-1 中就是 768;